The Questions That Will Build the Next Generation of AI Founders

How asking better questions—not chasing answers—will define the next generation of AI founders.

When Jordan Fisher, co-founder and former CEO of Standard AI, took the stage at AI Startup School on June 17, 2025, he didn’t project certainty — he opened with confusion.

“I’m probably more confused than I’ve ever been in my entire life,” he admitted. “But when you’re confused, that’s the start of something interesting.”

Now leading an AI alignment research team at Anthropic, Fisher urged founders to reframe their thinking: stop chasing quick answers and start asking better questions. In a world where AGI may be only a few years away, he believes this mindset is not optional — it’s essential for survival.

“If there was ever a time to stop and ask questions, it’s probably right now.”

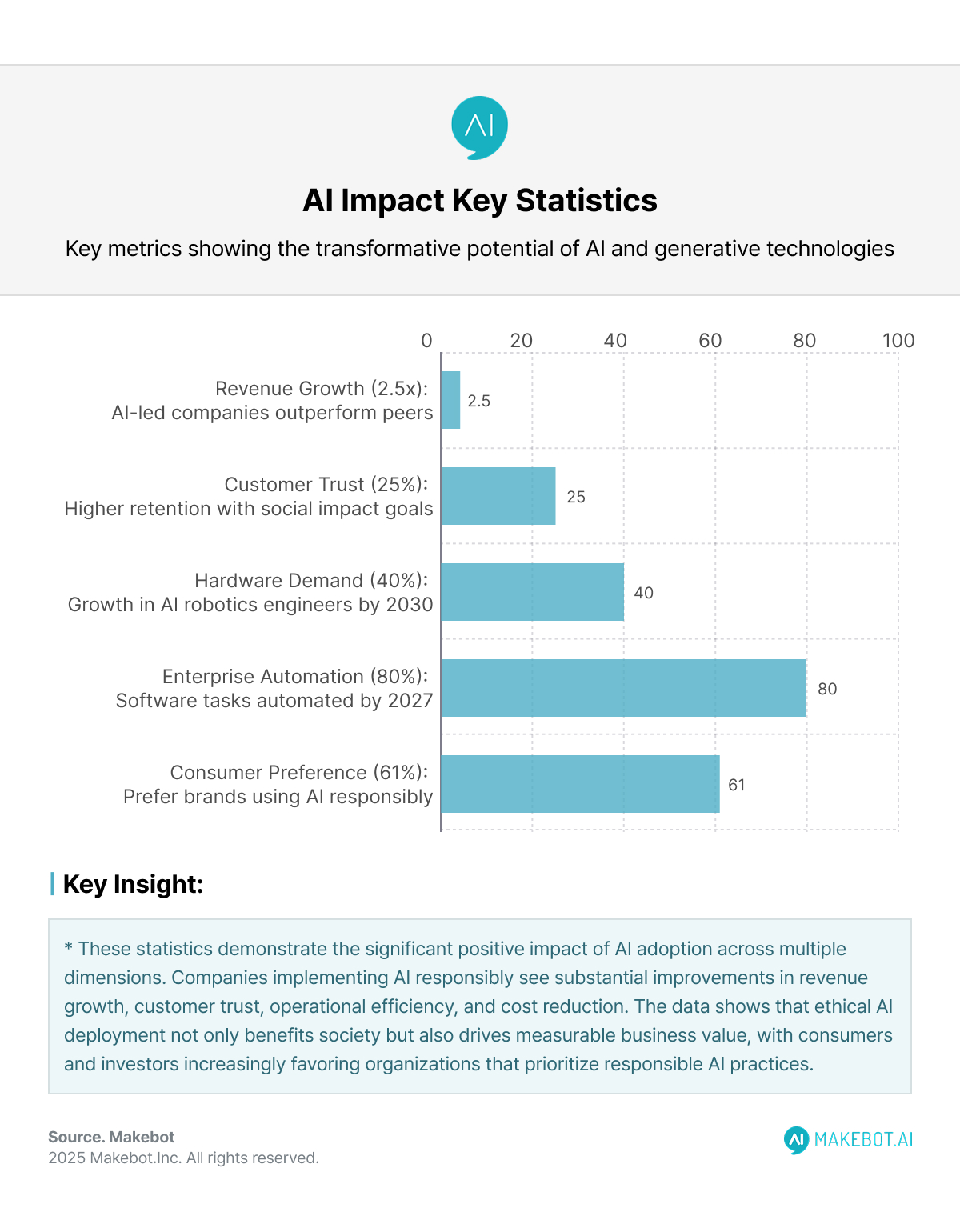

1. Should You Even Start a Company Right Now?

Fisher’s first question hit every founder in the gut: Should you even start a startup right now?

Throughout his career, he said, foresight was a competitive edge — he could “see five or ten years ahead.” But now?

“I can see for maybe three weeks or less. I really just don’t know what’s going to happen.”

That uncertainty, he argued, defines the current entrepreneurial era. With Generative AI and Large Language Models evolving faster than business models can adapt, founders must rethink everything — from strategy to hiring to ethics. He advised startups not to plan only for today’s capabilities, but to build for a world two years out — when AGI could realistically emerge.

“If you’re not thinking about how AGI will change hiring, marketing, and go-to-market strategies, you’re not doing your job as a founder.”

And yet, amid the chaos, Fisher finds optimism. Founders, he says, are uniquely positioned for this moment because “it’s literally your job to answer every single question, all the time.” According to McKinsey’s AI and the Future of Work 2025 report, 55% of global executives now believe AGI will meaningfully impact their industries by 2027 — double the rate of 2023.

2. When Software Becomes Commoditized

Fisher then posed another uncomfortable question: What happens when software itself becomes a commodity? He described a plausible near future where AI Chatbots and code-generation agents can instantly create applications on demand — no developers, no app stores.

“Maybe consumers will stop downloading apps altogether. They’ll just say, ‘I want my phone to do something,’ and the AI makes it happen. What’s the point of downloading an app at that point?”

This scenario challenges the entire SaaS model. Yet Fisher acknowledged that commoditization doesn’t automatically erase opportunity — it raises the bar.

“Anyone will be able to make the equivalent of today’s apps by prompting. But can you make an exceptional app tomorrow? The kind that only great human-AI teams can build?”

That distinction, he argued, is where defensibility lies. Startups must go beyond functionality — they must design exceptionality. Gartner projects that 80% of enterprise software tasks will be automated through Generative AI tools by 2027, but only 22% of firms will achieve “sustained differentiation” through quality and trust.

3. Building AI-Native Teams and Cultures

When asked how AI-native teams will differ from traditional ones, Fisher warned that “smaller teams” doesn’t necessarily mean “safer” or “better.”

“In a semi-automated world, a single person could make a decision that changes the entire product — and no one else might even be aware of it.”

Trust, therefore, becomes the core currency of the AI era. The traditional guardrails (diverse employees, human oversight, whistleblowers) will erode as automation scales. He proposed a radical alternative: AI-powered audits. These systems could continuously inspect a company’s decisions, communications, and code against declared ethical standards, then delete themselves to preserve privacy.

“We need to figure out how to instill trust not just in the models, but in the agents — and in the companies building those agents.”

This concept reframes governance as technology itself. In Fisher’s view, trust in architecture will soon matter more than brand reputation. According to Dataiku’s Global AI Confessions Report, 74% of CEOs fear losing their jobs within two years if they fail to deliver AI-driven results, underscoring how leadership survival now depends on AI execution and governance. This aligns with Jordan Fisher’s warning that in AI-native teams, smaller or more automated structures won’t guarantee safety — a single unchecked action could reshape entire systems.

As traditional oversight weakens, both the report and Fisher’s talk point to a shared truth: trust must be engineered, not assumed. From AI-powered audits to transparent governance, the future of leadership will hinge on building trustworthy architectures and accountable AI cultures, where confidence in the system itself becomes the ultimate measure of stability and success.

4. Alignment as Both Ethical and Economic Pressure

Fisher’s alignment perspective blended moral philosophy with startup pragmatism. Alignment isn’t only about controlling superintelligence — it’s about ensuring business viability.

“If you’re going to trust an LLM to work for a day, or a week, before you check its output, you better have some degree of certainty it’s not going off the rails.”

He noted that long-horizon Large Language Models will only become economically useful once they are safe and predictable enough to handle tasks unsupervised. This creates a unique pressure — progress on AI alignment will accelerate not just for existential reasons, but because the market demands it.

“There’s good news here,” he added. “Economic pressure is a great forcing function for safety progress.”

In other words, financial incentives might finally align with ethical ones — at least temporarily.

Fisher reframed AI alignment as both a moral and economic imperative — not just about controlling superintelligence, but ensuring business survival in an era of autonomous systems. He warned that trusting Large Language Models to operate unsupervised requires more than technical confidence; it demands governance, predictability, and accountability.

As companies scale AI into decision-making, alignment becomes the new frontier of competitiveness — where safety, transparency, and trust directly impact profitability. Fisher argued that this convergence of ethical responsibility and market pressure will accelerate progress faster than regulation alone. In his words, “economic pressure is a great forcing function for safety progress,” signaling that the next phase of innovation will belong to founders who treat AI ethics as infrastructure, aligning purpose with performance.

5. Defensibility in a Post-AGI World

Fisher challenged founders to identify what will remain hard — even when intelligence is cheap.

“If I can prompt GPT-7 or Claude 7 to replicate your startup, what’s your advantage?”

His answer: hard problems — energy, infrastructure, manufacturing, robotics, semiconductors — anything that resists full automation.

“I like solving hard problems. Everyone has a different moat. Some people are great at marketing; mine is building what’s still hard after AGI.”

He urged investors and entrepreneurs to orient around this “defensibility thesis.” Software may commoditize, but physical systems, logistics, and domain-specific expertise will retain value far longer.

Fisher urged founders to focus on “defensibility after intelligence becomes cheap,” emphasizing that true value will lie in solving problems that resist full automation — such as energy, infrastructure, robotics, and semiconductor innovation. As he warned, “If I can prompt GPT-7 or Claude 7 to replicate your startup, what’s your advantage?” His point reflects a growing investor concern: as Generative AI commoditizes software creation, differentiation will depend on expertise, physical systems, and proprietary data.

Similarly, Goldman Sachs projects that by 2030, AI automation could replace up to 25% of routine software tasks, while demand for AI-integrated robotics and hardware engineers will rise by over 40%. In Fisher’s view, startups that anchor themselves in these “hard-to-automate” domains will define the next durable wave of innovation — where defensibility is built not on code, but on complexity itself.

6. The New Neutrality Question

Perhaps Fisher’s most thought-provoking segment came when he asked: Who decides what AIs can and cannot do?

He likened the situation to infrastructure monopolies:

“If GE owned the entire electrical grid and said you can only plug in GE toasters, we’d call that absurd. But that’s what’s happening with AI models.”

As the world consolidates around a few major Large Language Models, he warned that their embedded policies could shape — or limit — entire industries. The future might require a new concept: AI neutrality.

Just as society enforces neutrality in electricity, water, and internet access, AI systems might need similar principles to ensure fair competition and open innovation.

Fisher’s call for AI neutrality highlighted one of the most urgent governance challenges of the decade: determining who controls what AIs can and cannot do. Comparing the current landscape to infrastructure monopolies, he warned that as power concentrates around a few dominant Large Language Models (LLMs), their internal policies could quietly dictate which products, voices, or innovations reach the market.

This concern is already evident. According to Epoch AI’s 2025 analysis, global AI compute capacity is rapidly concentrating, with AI workloads projected to consume 70% of all data-center resources by 2030, largely driven by hyperscale providers such as OpenAI, Anthropic, Google DeepMind, Meta, and Amazon, which collectively dominate large-model training infrastructure.

Meanwhile, AI’s scale and dependency are growing at unprecedented speed, with AI training compute increasing 300,000-fold since 2012 and 41% of all global code now AI-generated, as noted in Epoch AI’s 2025 analysis. This concentration of power means that the embedded policies and moderation layers of a few LLMs could shape, or limit, innovation across entire industries.

Fisher’s call for AI neutrality, therefore, echoes a broader societal need for open, transparent, and competitive AI ecosystems — where intelligence functions as a shared utility, not a proprietary monopoly.

7. From “Build Something People Want” to “Build What Society Needs”

In one of his most emotional moments, Fisher returned to Silicon Valley’s founding ethos.

“We used to say, ‘I’m trying to change the world.’ It sounded cringe, but the founders really meant it.”

Now, he fears many have replaced that ambition with panic and profit-seeking.

“People hear about AGI and their first question is, ‘How do we make money off this?’ That’s deeply disappointing.”

He doesn’t dismiss financial success — in fact, he jokes, “I hope you make money while you can.” But he insists founders use this period to create something lasting.

“This might be the last product you build before AGI changes everything. Make sure it’s something worth surviving that change.”

He redefined Y Combinator’s classic mantra — Build something people want — into a new moral compass:

“People want things that are good for society. Don’t just ask what users will consume — ask what the world truly needs.”

Fisher closed with a powerful reframing of Silicon Valley’s ethos — from “Build something people want” to “Build what society needs.” He urged founders to move beyond short-term profit and address the long-term impact of AGI, warning that the next generation of products may define humanity’s relationship with intelligence itself.

His sentiment aligns with growing data showing a public appetite for ethical and socially responsible AI: according to a survey, 61% of global consumers now prefer brands that “use Generative AI responsibly for societal good,” while 56% of investors say a company’s AI ethics and governance directly influence their funding decisions.

Likewise, another survey found that organizations with clear social-impact goals see 25% higher customer trust and retention. Fisher’s call captures this shift: in the AGI era, the startups that endure won’t just solve market problems; they’ll build systems that align with human values, advancing both innovation and the collective good.

8. Information Diets and Thinking Independently

In the audience Q&A, when asked where he finds clarity amid confusion, Fisher gave a surprisingly grounded answer:

“Honestly, Twitter. But I’m religious about curating it. Follow people with good takes, unfollow dumb ones. Your information diet determines your mental model.”

He compared it to reinforcement learning — balancing exploration and exploitation. Founders, he argued, must seek diverse inputs, not echo chambers.
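For readers who have not met the reinforcement-learning idea Fisher is borrowing, here is a minimal sketch of one common way to balance exploration and exploitation, the epsilon-greedy rule. It is an illustration only, not something shown in the talk: the function names `pick_source` and `simulate`, the `epsilon` setting, and the payoff numbers are all hypothetical, standing in for "information sources" of differing quality.

```python
import random

def pick_source(estimates, epsilon, rng):
    """Epsilon-greedy choice: explore a random source with probability epsilon,
    otherwise exploit the source with the highest estimated value."""
    if rng.random() < epsilon:
        return rng.randrange(len(estimates))
    return max(range(len(estimates)), key=lambda i: estimates[i])

def simulate(true_values, epsilon=0.1, steps=1000, seed=0):
    """Run the loop and return (estimates, counts) per source.
    true_values are made-up average payoffs used only for this illustration."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_values)
    counts = [0] * len(true_values)
    for _ in range(steps):
        i = pick_source(estimates, epsilon, rng)
        reward = rng.gauss(true_values[i], 1.0)  # noisy payoff from reading source i
        counts[i] += 1
        estimates[i] += (reward - estimates[i]) / counts[i]  # running average
    return estimates, counts

if __name__ == "__main__":
    estimates, counts = simulate([0.2, 0.5, 0.9])
    print(counts)  # how often each hypothetical source was consulted
```

With `epsilon` set to 0 the loop never samples at random, so it tends to settle on whichever source happened to look good early, which is the echo-chamber failure Fisher describes. A small positive `epsilon` keeps a fraction of attention on untested inputs.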

“Most of the tech industry loves to say it’s forward-thinking, but there’s extreme groupthink. Everyone’s two years behind already.”

It’s a reminder that staying ahead requires not just better technology — but better thinking.

Fisher ended by emphasizing the importance of information discipline — the ability to filter, question, and diversify one’s inputs in an era of algorithmic noise. He likened it to reinforcement learning, where founders must balance “exploration and exploitation” to avoid intellectual stagnation.

With users now spending an average of 2 hours 27 minutes daily across 7 platforms, and 60% of tech executives reporting that they rely on social media for industry insights, the danger of algorithmic echo chambers has never been greater. Fisher’s call to think independently aligns with the data: while social media connects billions, its AI-driven feeds increasingly shape professional judgment. For founders, mastering this “information diet” is not optional—it’s a strategic necessity to stay ahead in a landscape where attention is abundant but original thinking is scarce.

The Era of Questions

Jordan Fisher’s talk wasn’t a blueprint for startup success — it was a wake-up call for intellectual humility.

In a time when Generative AI automates creation and Large Language Models simulate expertise, founders’ real edge is not code, capital, or connections — it’s clarity of purpose.

“Founders are the ones who think about everything. Every question. Always. That’s why you're best positioned to navigate this moment.”

As the frontier of intelligence expands, Fisher leaves us with one timeless truth: the future won’t belong to those who have the answers — it will belong to those still brave enough to ask the right questions.

Expert Insight: Key Takeaways for Modern Founders

- Design for Trust: Make “alignment” part of your brand. Users will buy trust before features.

- Build AI-Native Systems: Don’t retrofit; architect your product around Generative AI from day one.

- Solve Hard Problems: Focus on infrastructure, energy, and embodied systems — domains that resist commoditization.

- Stay Intellectually Independent: Curate your inputs as carefully as your datasets.

- Balance Profit with Purpose: The companies that survive AGI will be those that serve society, not just the market.

Where Innovation Meets Alignment

As Jordan Fisher emphasized, the future of AI belongs to founders who build trustworthy, AI-native systems — not just fast products. This is where Makebot bridges the gap. We deliver industry-specific LLM agents and end-to-end Generative AI solutions that turn ethical alignment into measurable business value.

From healthcare and finance to retail and public services, our tools like BotGrade, MagicTalk, and MagicSearch help enterprises move from AI exploration to execution — responsibly and at scale.

Backed by HybridRAG, presented at SIGIR 2025 for achieving a 26.6% accuracy boost and 90% cost reduction, Makebot empowers organizations to lead with trust, transparency, and performance in the AGI era.

👉 Start your AI journey today: www.makebot.ai

📩 Contact us at b2b@makebot.ai to discuss how we can help you lead the AI shift.
