Top RAG Tools to Boost Your LLM Workflows in 2025

Top RAG tools in 2025 enhance LLM workflows with better accuracy, less hallucination, and scale.

Top RAG Tools to Boost Your LLM Workflows in 2025

As organizations increasingly integrate artificial intelligence into their operations, the demand for more accurate, reliable, and contextually aware AI solutions has skyrocketed. Retrieval Augmented Generation (RAG) has emerged as a critical approach to enhance the capabilities of Large Language Models (LLMs), addressing key limitations like hallucinations, outdated information, and lack of domain-specific knowledge.

This article explores the top RAG tools that can significantly boost your LLM workflows in 2025, their technical capabilities, and how to select the right solution for your specific requirements.

Top Reasons Why Enterprises Choose RAG Systems in 2025: A Technical Analysis. Read more here!

Understanding RAG and Its Importance in LLM Ecosystems

RAG combines the generative power of LLMs with information retrieval systems to produce more accurate, contextually relevant responses. Unlike traditional Large Language Models that rely solely on their pre-trained knowledge, Retrieval Augmented Generation systems dynamically fetch relevant information from external sources before generating a response.

According to recent industry research, RAG implementations can reduce hallucinations by up to 70% and improve response accuracy by 40-60% compared to using standalone LLMs. Organizations implementing RAG tools in their LLM workflows report significantly higher user satisfaction and trust in AI-generated outputs.

Key Components of Effective RAG Systems

An effective RAG implementation consists of several critical components:

- Document Processing and Chunking: Techniques to divide documents into optimal segments for retrieval

- Embedding Models: Algorithms that convert text into vector representations

- Vector Databases: Systems that store and retrieve embeddings efficiently

- Retrieval Mechanisms: Methods for finding the most relevant information

- Integration Framework: Architecture connecting retrieval results with LLM generation

- Evaluation Metrics: Systems to measure and improve RAG performance

How Retrieval-Augmented Generation (RAG) Supports Healthcare AI Initiatives. More here!

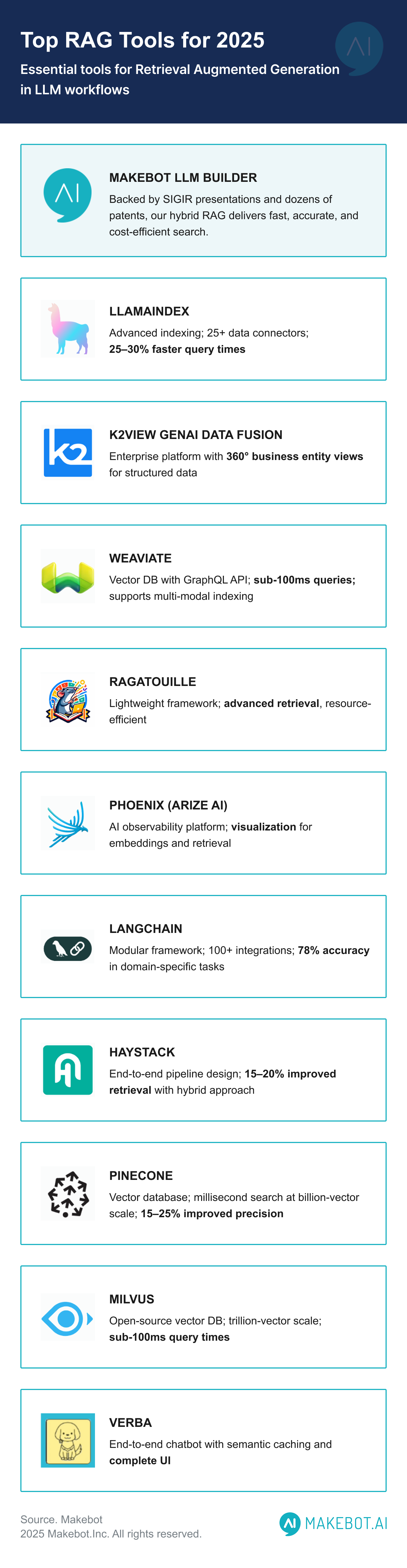

Top RAG Tools for 2025

- Makebot LLM Builder

Technical Specifications:

- Architecture: All-in-one LLM solution with hybrid RAG optimization

- Integration Capabilities: Multi-LLM support (OpenAI, Anthropic, Google, Naver, proprietary models)

- Key Features: hybrid RAG with 100+ patents

- Deployment Options: Cloud, on-premises, enterprise integration

Makebot distinguishes itself as a comprehensive RAG solution provider with patented hybrid RAG technology that minimizes LLM errors while optimizing both performance and cost efficiency. Its LLM Builder platform stands out for seamless integration of multiple LLMs, fine-tuning capabilities, and industry-specific customizations.

The company's advanced file learning system supports all document types (DBMS, PDF, Word, Excel) with streamlined automated learning processes, making it particularly valuable for enterprise deployments requiring comprehensive knowledge integration. Makebot's hybrid RAG implementation has been verified through international-level research and technology validation, resulting in over 100 original technology patents.

What sets Makebot apart is its proven track record with major enterprises including Woongjin Group, Hyundai Motor Company, and Korean Air, demonstrating its ability to handle enterprise-grade RAG implementations at scale with exceptional stability.

- LangChain

Technical Specifications:

- Architecture: Modular, component-based framework

- Integration Capabilities: 100+ integrations with vector stores, document loaders, and LLM providers

- Key Features: Document loaders, text splitters, vector stores, memory components, and chains

- Programming Languages: Python, JavaScript/TypeScript

- Deployment Options: Cloud, on-premises, serverless

LangChain has established itself as one of the most comprehensive RAG tools available, providing a modular framework for building sophisticated LLM workflows. Its strength lies in its extensibility and the ability to chain different components together to create complex applications.

Recent benchmarks show that LangChain-based RAG systems achieve 78% accuracy in domain-specific information retrieval tasks when properly configured. Its LangGraph feature enables the creation of stateful multi-actor applications, adding another dimension to traditional RAG implementations.

- LlamaIndex

Technical Specifications:

- Architecture: Data framework focused on indexing and retrieval

- Integration Capabilities: Native support for 25+ data connectors

- Key Features: Advanced indexing structures (list, tree, keyword table, vector), document readers, query engines

- Programming Languages: Python

- Deployment Options: Cloud, on-premises, serverless via LlamaCloud

LlamaIndex differentiates itself by focusing specifically on data ingestion and retrieval for Large Language Models. Its specialized indexing structures optimize retrieval performance, particularly for hierarchical data. Recent benchmarks indicate that LlamaIndex's vector index achieves 25-30% faster query times compared to basic vector database implementations.

The framework's strength in RAG workflows comes from its simplified API for connecting diverse knowledge sources to LLMs, making it an excellent choice for developers looking to quickly implement Retrieval Augmented Generation without building complex pipelines.

- Haystack

Technical Specifications:

- Architecture: End-to-end framework with modular pipeline design

- Integration Capabilities: OpenAI, Cohere, Anthropic, HuggingFace models

- Key Features: Document stores, retrievers, readers, generators, evaluators

- Programming Languages: Python

- Deployment Options: REST API, Docker, cloud deployment

Haystack is designed as a production-ready framework for RAG applications. It excels in search and question-answering systems that work with large document collections. Its pipeline architecture enables developers to experiment with different retrievers, readers, and generators to optimize LLM workflows.

Haystack's hybrid retrieval approach, combining sparse and dense retrievers, shows a 15-20% improvement in retrieval quality compared to single-method approaches. The framework also includes built-in evaluation tools to measure and enhance RAG system performance.

- K2view GenAI Data Fusion

Technical Specifications:

- Architecture: Enterprise platform with Micro-Database™ technology

- Integration Capabilities: Integration with major enterprise systems (CRM, ERP, billing)

- Key Features: 360° business entity views, dynamic data masking, real-time data access

- Deployment Options: Cloud, on-premises, hybrid

K2view's solution addresses a critical challenge in enterprise RAG implementations: accessing and unifying structured data across multiple systems. By organizing enterprise data into 360° views of individual business entities, it ensures LLM workflows have comprehensive, real-time access to relevant information.

This tool is particularly valuable for organizations that need to combine structured data (from databases) and unstructured data (documents, knowledge bases) in their RAG systems, enabling more personalized and accurate AI responses.

- Pinecone

Technical Specifications:

- Architecture: Cloud-native vector database

- Performance: Millisecond search latency at billion-vector scale

- Key Features: Hybrid search (dense + sparse), metadata filtering, distributed architecture

- Indexing Algorithms: HNSW, IVF, ScaNN

- Deployment Options: Managed cloud service

While not a complete RAG solution, Pinecone is a specialized vector database optimized for similarity search—a critical component in Retrieval Augmented Generation pipelines. Its cloud-native architecture enables scalable, low-latency vector searches across billions of embeddings.

Recent benchmarks show that Pinecone's hybrid search can improve retrieval precision by 15-25% compared to pure dense retrieval, making it an excellent choice for organizations building high-performance RAG components in their LLM workflows.

- Weaviate

Technical Specifications:

- Architecture: Open-source vector database with semantic search capabilities

- Performance: Sub-100ms queries with multi-million vector datasets

- Key Features: GraphQL API, multi-modal indexing, modular architecture

- Indexing Algorithms: HNSW

- Deployment Options: Self-hosted, cloud, Docker, Kubernetes

Weaviate combines vector search with traditional database functionality, making it well-suited for RAG implementations that require complex data relationships. Its GraphQL API provides a flexible query interface, while its modular architecture allows for customization of different components.

Weaviate's multi-modal capabilities enable developers to build RAG systems that work with both text and images, expanding the potential applications of Retrieval Augmented Generation in LLM workflows.

- Milvus

Technical Specifications:

- Architecture: Open-source vector database with cloud-native design

- Performance: Millisecond queries on trillion-vector scale

- Key Features: Scalar filtering, heterogeneous computing, dynamic schema

- Indexing Algorithms: HNSW, IVF, FLAT, ANNOY

- Deployment Options: Self-hosted, Zilliz Cloud (managed service)

Milvus is designed for large-scale vector similarity search, making it suitable for enterprise-grade RAG implementations. Its support for multiple indexing algorithms allows developers to optimize for different trade-offs between speed and accuracy.

In benchmark tests, Milvus shows exceptional performance scaling, maintaining sub-100ms query times even with billions of vectors, making it an excellent choice for organizations building scalable RAG tools into their LLM workflows.

- RAGatouille

Technical Specifications:

- Architecture: Lightweight framework for efficient RAG pipelines

- Key Features: Advanced retrieval techniques, customizable generation, multi-modal support

- Integration Capabilities: OpenAI, Anthropic, HuggingFace models

- Programming Languages: Python

- Deployment Options: Self-hosted, containerized

RAGatouille offers a streamlined approach to building RAG systems, focusing on advanced retrieval techniques to improve contextual understanding. Its lightweight design makes it suitable for developers who need more control over their RAG implementation without the overhead of larger frameworks.

The framework's strength lies in its flexibility and optimization for resource-efficient RAG applications, particularly valuable for organizations with compute constraints.

- Verba

Technical Specifications:

- Architecture: Open-source RAG chatbot powered by Weaviate

- Key Features: End-to-end user interface, semantic caching, filtering, hybrid search

- Integration Capabilities: OpenAI, Cohere, HuggingFace, Anthropic

- Deployment Options: Local, cloud, containerized

Verba provides an end-to-end solution for building RAG-powered chatbots, simplifying the process of exploring datasets and extracting insights. Its semantic caching accelerates queries by reusing previous search results, improving response times in LLM workflows.

What makes Verba stand out is its comprehensive user interface that handles everything from data ingestion to query resolution, making it accessible to teams with varying technical expertise.

- Phoenix by Arize AI

Technical Specifications:

- Architecture: Open-source AI observability and evaluation platform

- Key Features: LLM Traces, LLM Evals, embedding analysis, RAG analysis

- Integration Capabilities: LangChain, LlamaIndex, Haystack, DSPy

- Programming Languages: Python

- Deployment Options: Self-hosted, containerized

Phoenix focuses on a critical aspect of RAG implementation that many other tools neglect: evaluation and observability. By providing detailed insights into how RAG pipelines are performing, Phoenix helps developers identify and resolve issues that impact response quality.

The platform's visualization capabilities for embedding spaces and retrieval effectiveness make it an invaluable companion tool for organizations implementing RAG in production LLM workflows.

The Ultimate Guide to RAG vs Fine-Tuning: Choosing the Right Method for Your LLM. Read here!

Technical Benchmarking: Embedding Models and Chunk Sizes

Recent benchmark studies have highlighted the impact of embedding models and chunk sizes on RAG performance.

Among embedding models tested with 10 different LLMs, Google's Gemini embedding achieved the highest average accuracy (82%), followed by OpenAI's text-embedding-3-large (78%) and text-embedding-3-small (75%), with Mistral-embed showing the lowest performance (66%).

For chunk sizes, testing across multiple LLMs indicated that a chunk size of 512 tokens generally delivered the best performance (82% average success rate), compared to 256 tokens (75%) and 1024 tokens (78%). These findings emphasize the importance of tuning these parameters in RAG implementations to optimize retrieval accuracy.

RAG vs. Long Context Windows: Performance Insights

An important technical consideration when implementing RAG tools is whether they outperform the expanding context windows of modern LLMs. Recent benchmarks comparing Llama 4 Scout using RAG versus its native long context window are revealing:

- Llama 4 with RAG: 78% accuracy

- Llama 4 with long context window only: 66% accuracy

This 12% performance gap demonstrates that despite increasing context lengths, Retrieval Augmented Generation continues to provide substantial benefits by focusing on high-quality, contextually relevant data rather than processing entire lengthy contexts.

Selecting the Right RAG Tool for Your Needs

When selecting RAG tools for your LLM workflows, consider the following factors:

- Scale Requirements: Consider the volume of data and queries your system will handle.

- Data Types: Assess whether you need to process primarily unstructured documents, structured data, or both.

- Integration Needs: Evaluate compatibility with your existing systems and preferred LLM providers.

- Technical Expertise: Match tool complexity with your team's capabilities.

- Deployment Environment: Determine whether cloud, on-premises, or hybrid deployment best suits your needs.

- Performance Metrics: Identify which aspects of performance (accuracy, latency, throughput) are most critical.

- Budget Constraints: Balance capabilities with cost considerations.

Implementation Challenges and Best Practices

Implementing RAG in production LLM workflows presents several challenges:

- Data Quality Issues: Low-quality or outdated documents can degrade RAG performance. Implement rigorous data curation processes and regular updates to knowledge bases.

- Chunking Strategy Complexity: Finding the optimal chunking approach requires balancing context preservation and retrieval precision. Test different chunk sizes and overlapping strategies based on your specific content.

- Tuning Requirements: RAG systems require substantial tuning across multiple components. Implement systematic testing of embedding models, retrievers, and prompt templates.

- Performance Bottlenecks: Without proper optimization, RAG can introduce significant latency. Implement caching strategies and optimize infrastructure for vector operations.

- Prompt Engineering Dependencies: Even with excellent retrieval, RAG systems depend on effective prompt design. Develop templates that properly instruct the LLM on how to use retrieved information.

Conclusion

As we move through 2025, Retrieval Augmented Generation continues to be a transformative approach for enhancing Large Language Model capabilities. The diverse ecosystem of RAG tools provides options for organizations at various stages of AI maturity, from experimental prototypes to enterprise-scale deployments.

By carefully selecting and implementing the right RAG tools for your specific LLM workflows, you can significantly improve the accuracy, relevance, and trustworthiness of AI-generated outputs while leveraging your organization's unique knowledge assets. Whether you're building customer-facing chatbots, internal knowledge systems, or specialized research tools, RAG offers a powerful framework for making LLMs more valuable and reliable in real-world applications.

Boost Your LLM Workflows with Makebot’s Advanced RAG Solutions

Elevate your AI with Makebot’s cutting-edge Retrieval Augmented Generation technology, custom-built chatbots, and seamless multi-LLM integration. Experience higher accuracy, reduced hallucinations, and tailored workflows designed for your industry.

Get started today — Contact Makebot for your RAG-powered AI transformation!

.jpg)

.png)

_2.png)

.jpg)