Beyond the Build: Uncovering the Hidden Costs of In-House LLM Chatbot Development


Enterprises rarely set out to build an expensive system. They set out to build a custom one.

But as global adoption of generative AI rises (86% of enterprises say AI will be a “mainstream technology” in 2025), so does the misunderstanding of what it truly costs to build and maintain internal AI systems. Most leaders assume the main expense is the initial development. In reality, the true cost of an LLM chatbot emerges slowly, buried inside infrastructure, compliance, data readiness, and constant re-engineering.

According to industry data, 70–85% of AI projects fail, and one of the biggest drivers is the cost explosion that comes after deployment, not before.

This article breaks down those hidden costs and risks in a clear, research-driven narrative, so that enterprise leaders can make better decisions before committing to internal AI development.


The Illusion of Predictable AI Development Costs

Most enterprises begin with clear budgeting assumptions: hire a few engineers, fine-tune a model, and integrate it with existing systems. But enterprise AI doesn’t follow the same rules as traditional software development.

Industry data shows that LLM-powered chatbots routinely exceed initial cost forecasts because the work is experimental, not linear. Models behave unpredictably, data changes, and infrastructure grows with every new feature.

Even “simple” AI projects accumulate new expenses over time: prompt tuning, QA validation, API overages, and integration rework, each of which adds 15–25% to the original build cost. This unpredictability is why many organizations underestimate:

- How long it takes to achieve production-level accuracy

- How frequently models must be retrained or updated

- How scaling user demand multiplies API and token costs

- How rapidly LLM environments evolve, forcing upgrades

The result: an internal AI project whose maintenance cost quietly balloons while leadership still believes it is “already built.”

Hidden Infrastructure Costs: Cloud, Compute, and Token Consumption

Most businesses dramatically miscalculate ongoing LLM inference costs. A single user query to an LLM rarely involves a single model call. In real enterprise deployments, that query may trigger:

- A classification step

- A retrieval step

- Multiple reasoning steps

- A generation step

What looked inexpensive at prototype scale becomes overwhelmingly costly in production—especially during seasonal peaks, marketing campaigns, or high-traffic events.
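
To see how the steps listed above compound, here is a minimal Python sketch of per-query cost. The step names follow the list; the token counts, the number of reasoning calls, and the per-1,000-token prices are illustrative assumptions, not vendor pricing or measured figures.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens (assumptions, not real vendor rates).
PRICE_IN_PER_1K = 0.003
PRICE_OUT_PER_1K = 0.015


@dataclass
class Step:
    name: str
    input_tokens: int
    output_tokens: int
    calls: int = 1  # how many times this step runs for one user query


def step_cost(step: Step) -> float:
    """Cost of one pipeline step for a single user query."""
    per_call = (step.input_tokens / 1000) * PRICE_IN_PER_1K \
        + (step.output_tokens / 1000) * PRICE_OUT_PER_1K
    return per_call * step.calls


def query_cost(steps: list) -> float:
    """Total cost of one user query across every model call it triggers."""
    return sum(step_cost(s) for s in steps)


def monthly_cost(steps: list, queries_per_day: int, days: int = 30) -> float:
    """Projected monthly spend at a given daily query volume."""
    return query_cost(steps) * queries_per_day * days


# Illustrative pipeline: token counts are made up for the example.
PIPELINE = [
    Step("classification", input_tokens=300, output_tokens=10),
    Step("retrieval", input_tokens=400, output_tokens=50),
    Step("reasoning", input_tokens=2000, output_tokens=300, calls=3),
    Step("generation", input_tokens=2500, output_tokens=400),
]

if __name__ == "__main__":
    print(f"generation call alone: ${step_cost(PIPELINE[-1]):.4f}")
    print(f"full pipeline per query: ${query_cost(PIPELINE):.4f}")
    print(f"monthly at 1,000 queries/day: ${monthly_cost(PIPELINE, 1000):,.2f}")
```

Under these assumed numbers the full pipeline costs several times what the final generation call costs on its own, which is the gap a prototype budget based on "one call per query" tends to miss.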

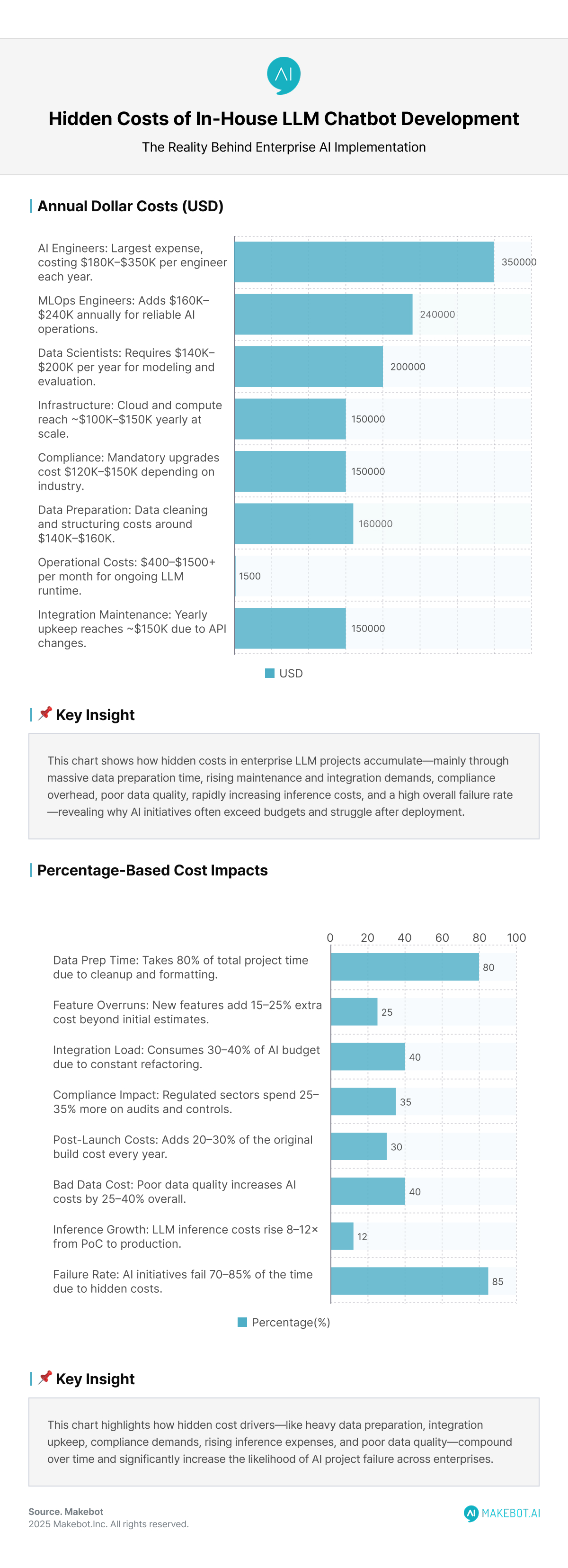

According to industry data:

- AI chatbots cost $400–$1,500 per month just in operational expenses at moderate scale.

- High-volume enterprise workloads easily exceed $2,000 per month in token usage, storage, and hosting.

- LLM inference costs increase 8–12x when moving from PoC to production.

- 27% of companies cite “runaway cloud costs” as their biggest AI challenge.

Cloud computing becomes another silent budget sink. Enterprises must eventually invest in:

- GPU-accelerated servers

- Vector database scaling

- Low-latency load balancers

- High-availability disaster recovery

None of this is optional: LLM chatbot reliability depends on it. This is where Makebot’s architectural advantage becomes clear. By shifting most of the computational load offline through HybridRAG, Makebot reduces the number of runtime LLM calls and, with them, long-term cost exposure.
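
The article does not describe HybridRAG's internals, so the following is only a generic sketch of the underlying idea: answer from question-answer pairs prepared offline, and call an LLM only on a miss. The token-overlap similarity, the 0.6 threshold, the class name, and the `call_llm` callable are all assumptions made for illustration.

```python
import re
from typing import Callable, Optional


def tokens(text: str) -> set:
    """Lowercased word tokens, used for a crude similarity measure."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set, b: set) -> float:
    """Jaccard overlap between two token sets (0.0 when either is empty)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


class PregeneratedQAStore:
    """Hypothetical store of QA pairs that were generated offline."""

    def __init__(self, qa_pairs: dict, threshold: float = 0.6):
        self._entries = [(tokens(q), a) for q, a in qa_pairs.items()]
        self._threshold = threshold  # assumed cut-off, would need tuning

    def lookup(self, question: str) -> Optional[str]:
        """Return the best stored answer, or None if nothing is close enough."""
        q = tokens(question)
        best_score, best_answer = 0.0, None
        for entry_tokens, stored_answer in self._entries:
            score = jaccard(q, entry_tokens)
            if score > best_score:
                best_score, best_answer = score, stored_answer
        return best_answer if best_score >= self._threshold else None


def answer(question: str, store: PregeneratedQAStore,
           call_llm: Callable[[str], str]) -> tuple:
    """Return (answer, used_llm). The LLM is called only on a store miss."""
    cached = store.lookup(question)
    if cached is not None:
        return cached, False
    return call_llm(question), True
```

A production system would more likely use embedding similarity than token overlap; the point of the sketch is only that every store hit is a runtime model call that never happens.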

The Talent Burden: Why Internal Teams Become a Cost Spiral

The cost of an internal AI team doesn’t stop at salaries. Building an enterprise-grade chatbot requires a multidisciplinary workforce:

- LLM engineers

- Data scientists

- Data engineers

- DevOps/MLOps

- Security and compliance experts

- Domain SMEs for QA validation

These roles are expensive and difficult to retain, particularly as demand for large language model expertise outpaces supply. Talent shortages alone delay project timelines by months. And once the team is built, the financial commitments continue indefinitely:

- Continuous upskilling

- Keeping pace with new model architectures

- Refactoring pipelines for emerging frameworks

- Monitoring drift and retraining models

- Updating integrations as APIs evolve

- Compliance re-certifications

According to global salary benchmarks:

- AI engineers cost $180K–$350K annually in the U.S.

- MLOps engineers average $160K–$240K.

- Data scientists average $140K–$200K, depending on seniority.

AI is not a one-off project; it is a permanent organizational function. Many enterprises quietly fail midway because budgets were allocated for a single build, not a permanent AI operations program. It is also why 57% of enterprises now outsource AI maintenance even when they built the system internally.


Compliance: The Most Expensive Blind Spot

Regulatory costs are rarely included in initial chatbot budgets, but they are unavoidable—especially in finance, healthcare, government, and large-scale consumer operations. Across industries:

- HIPAA violations can cost up to $1.5M per incident.

- Financial-service chatbots require 25–35% more budget due to audit requirements.

Compliance requirements often include:

- End-to-end encryption

- Role-based access control

- Secure data routing

- Audit logs

- Data minimization

- Traceability

- Model output verification
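
Several of the requirements above (role-based access control, audit logs, data minimization) can be illustrated together. The sketch below is a simplified example under stated assumptions: the roles, actions, salt handling, and record fields are hypothetical, and a real deployment would follow its regulator's and auditor's specific requirements.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping (assumption for illustration).
ROLE_PERMISSIONS = {
    "agent": {"read_faq"},
    "clinician": {"read_faq", "read_patient_record"},
}


def is_allowed(role: str, action: str) -> bool:
    """Role-based access check: unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())


def pseudonymize(user_id: str, salt: str) -> str:
    """Data minimization: log a salted hash prefix instead of the raw identifier.

    This is pseudonymization, not anonymization; the salt must be kept secret.
    """
    digest = hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()
    return digest[:16]


def audit_record(user_id: str, role: str, action: str, salt: str) -> str:
    """Build one JSON audit-log line recording who tried what, and the outcome."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": pseudonymize(user_id, salt),
        "role": role,
        "action": action,
        "allowed": is_allowed(role, action),
    }
    return json.dumps(record, sort_keys=True)
```

Logging denied attempts as well as granted ones is a deliberate choice here: an audit trail that only shows successes says little about attempted misuse.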

One healthcare startup spent $120,000 solely on compliance upgrades after deployment. A financial firm required $150,000 to secure approval for LLM-based automation. Compliance is not optional, and in LLM systems it becomes an ever-expanding cost center.

Makebot mitigates this risk by embedding regulatory safeguards directly into its enterprise stack—AI governance, audit logs, confidence-based human review, and domain-specific sLLMs—reducing the compliance burden on internal teams.

Integration: The Hidden Technical Debt That Never Ends

An LLM Chatbot is only valuable when deeply integrated with CRMs, ERPs, HRIS systems, authentication layers, and internal data sources.

Industry data shows:

- Maintaining enterprise integrations consumes 30–40% of total AI budget.

- API version changes occur every 3–12 months, requiring continuous refactoring.

- Enterprises report losing 2–4 weeks during every major CRM/API update.

Each integration also brings its own maintenance risks:

- API rate-limit risk

- Version mismatches

- Error handling

- Data schema conflicts

- Authentication upgrades

- Dependency on third-party updates
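
Rate limits and error handling are the items on this list most often handled with a retry wrapper. The following is a minimal sketch of exponential backoff with jitter; `RateLimitError` is a hypothetical exception standing in for whatever a given API client raises on an HTTP 429 response, and the delay values are assumptions.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical error a client raises when the upstream API rate-limits us."""


def call_with_backoff(fn: Callable[[], T],
                      max_attempts: int = 5,
                      base_delay: float = 0.5,
                      max_delay: float = 8.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying on RateLimitError with exponentially growing delays.

    The delay doubles each attempt (capped at max_delay) and gets up to 50%
    random jitter so that many clients do not retry in lockstep.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts:
                raise  # out of retries: surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay / 2))
    raise RuntimeError("unreachable: loop either returns or raises")
```

The `sleep` parameter is injectable so the wrapper can be tested without real waiting. Every integration in the chatbot needs some version of this, which is part of why upkeep scales with the number of connected systems.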

One mid-sized brand spent more than $25,000 on unplanned integration fixes over 18 months, costs that were not part of the original quote. Enterprises underestimate how much ongoing maintenance integrations require. Cloud platforms change endpoints. Vendors update authentication rules. Internal systems migrate.

The integration never stays “stable”—it must evolve.

Makebot avoids this burden through ready-to-deploy platforms (BotGrade, MagicTalk, MagicSearch, MagicVoice) that abstract integration complexity and offer low-code connectors built for long-term reliability.

Data Preparation: The Largest Invisible Cost Driver

Enterprises consistently underestimate how much time and budget data preparation consumes.

Industry benchmarks show:

- Up to 80% of AI project time is spent cleaning and preparing data.

- Poor data quality increases AI project cost by 25–40%.

- Unstructured PDFs, scanned documents, and tables increase prep cost by 2–5x.

Across industries, 50–80% of AI project time is spent on:

- Data cleaning

- Metadata enrichment

- Document normalization

- Schema alignment

- OCR correction (especially for PDFs and scans)

- Labeling and QA

Training data cleanup and rework is one of the most expensive, and least anticipated, costs in chatbot development. For enterprise chatbots using RAG, this becomes even more resource-intensive because:

- Unstructured documents are inconsistent

- PDFs contain tables and mixed layouts

- Policies change frequently

- Organizational knowledge evolves

- Retraining cycles never end

This is exactly the pain point Makebot’s HybridRAG was designed to solve. With automated layout parsing, hierarchical chunking, and pre-generated QA knowledge bases, Makebot cuts both data preparation and runtime cost dramatically—validated by its 26.6% F1 improvement and global recognition at SIGIR 2025.
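
The article names hierarchical chunking without describing how Makebot implements it. As a generic illustration of the technique, the sketch below splits a document by its headings and tags every chunk with the heading path it came from. It assumes markdown-style `#` headings and a character-based size limit, both of which are simplifications.

```python
def hierarchical_chunks(text: str, max_chars: int = 500) -> list:
    """Split markdown-style text into chunks tagged with their heading path.

    Each chunk is a dict: {"path": [heading, subheading, ...], "text": "..."}.
    """
    path = []     # current heading trail, e.g. ["Leave Policy", "Sick Leave"]
    buffer = []   # body lines collected under the current heading
    chunks = []

    def flush() -> None:
        """Emit the buffered body as one or more chunks under the current path."""
        body = " ".join(buffer).strip()
        buffer.clear()
        # Crude fixed-size split for long sections; may cut mid-word.
        for start in range(0, len(body), max_chars):
            chunks.append({"path": list(path),
                           "text": body[start:start + max_chars]})

    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("#"):
            flush()  # close the previous section before changing the path
            level = len(stripped) - len(stripped.lstrip("#"))
            title = stripped.lstrip("#").strip()
            del path[level - 1:]  # drop headings at this level or deeper
            path.append(title)
        elif stripped:
            buffer.append(stripped)
    flush()
    return chunks
```

Keeping the heading path with each chunk means a retrieved passage such as "Bring a note." still carries the context "Leave Policy > Sick Leave", which a flat fixed-size split would lose.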

Post-Launch Drift, Monitoring, and Continuous Optimization

AI systems degrade—sometimes quickly. According to industry reports:

- Models begin drifting within 30–90 days of deployment.

- 74% of enterprises underestimate post-launch AI maintenance needs.

- Retraining cycles add 15–25% of the original project cost annually.
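
As a quick worked example of what the retraining figure implies over time, the sketch below adds yearly upkeep to a build budget. The $500,000 build cost and the three-year horizon are assumptions chosen only to make the arithmetic concrete; the 15–25% range is the one quoted above.

```python
def cumulative_cost(build_cost: float, annual_rate: float, years: int) -> float:
    """Build cost plus simple (non-compounding) yearly upkeep.

    annual_rate is the yearly upkeep as a fraction of the original build cost.
    """
    return build_cost * (1 + annual_rate * years)


BUILD_COST = 500_000  # hypothetical build budget in USD (assumption)

if __name__ == "__main__":
    for rate in (0.15, 0.25):
        total = cumulative_cost(BUILD_COST, rate, years=3)
        print(f"{rate:.0%} per year -> ${total:,.0f} after 3 years")
```

At these rates, three years of retraining alone adds 45–75% on top of the original build, before infrastructure, integration, or compliance costs are counted.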

Once deployed, enterprise chatbots face:

- Model drift

- Outdated organizational knowledge

- Business rule changes

- Product catalog updates

- New customer intent patterns

- Regulatory shifts

These changes require:

- New prompt strategies

- Updated QA datasets

- Re-indexing vector stores

- Re-finetuning models

- Manual quality checks

- Continuous UX improvements

Together, these iteration cycles account for the 15–25% of original development cost that retraining adds each year. An in-house team must also maintain ongoing pipelines, automate tests, and respond to unexpected drops in answer accuracy, all of which add further operational burden.
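
One common way to catch drops in answer accuracy early is to compare a rolling window of graded answers against the accuracy measured at launch. The sketch below is a generic illustration; the class name, window size, and tolerance are assumptions, and it presumes some process (human review or an automated check) labels each answer as correct or not.

```python
from collections import deque
from typing import Optional


class DriftMonitor:
    """Flags drift when rolling accuracy falls below baseline minus tolerance."""

    def __init__(self, baseline_accuracy: float,
                 window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # deque with maxlen drops the oldest result once the window is full
        self.results = deque(maxlen=window)

    def record(self, correct: bool) -> None:
        """Store the outcome of one graded answer."""
        self.results.append(1 if correct else 0)

    def rolling_accuracy(self) -> Optional[float]:
        """Share of correct answers in the current window, or None if empty."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def drift_detected(self) -> bool:
        """True only once the window is full and accuracy is below the floor."""
        if len(self.results) < self.results.maxlen:
            return False  # not enough data yet to judge
        return self.rolling_accuracy() < self.baseline - self.tolerance
```

Waiting for a full window before alerting trades detection speed for fewer false alarms; a team would tune the window and tolerance to its own traffic volume.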

Makebot addresses this through automated MLOps workflows, real-time monitoring dashboards, and industry-specific optimization cycles designed for stable long-term performance.

Vendor Lock-In vs. Internal Lock-In: Both Are Expensive

Many enterprises choose in-house development to avoid vendor dependency. Ironically, they introduce a different kind of lock-in: internal lock-in, caused by:

- Custom toolchains

- Engineer-specific knowledge

- Proprietary pipelines that only one person understands

- Custom scripts built around older model architectures

- Integration logic tied to legacy databases

- Lack of documentation as projects grow

Replacing or losing a key engineer can mean rebuilding entire modules from scratch.

Vendor lock-in is a risk—but internal lock-in can be costlier, slower to fix, and harder to escape.

Makebot reduces both risks by offering standardized, modular frameworks and domain-specific LLM agents that can integrate with existing enterprise systems without forcing irreversible architectural decisions.

Why Makebot’s Architecture Cuts These Hidden Costs

Makebot was built specifically for the challenges enterprises face when trying to build an LLM Chatbot from scratch. Here’s how Makebot mitigates the exact risks outlined above:

1. Cost Efficiency Through HybridRAG

- Offline QA generation reduces runtime compute

- Pre-generated semantic knowledge eliminates 80%+ of LLM calls

- Verified to reduce cost by up to 90% at scale

2. Domain-Specific LLM Agents

- Healthcare, finance, retail, logistics, government

- Used by leading institutions like Seoul National University Hospital

3. Ready-to-Deploy Enterprise Platforms

- BotGrade, MagicTalk, MagicSearch, MagicVoice

- Reduce integration complexity and upkeep

4. Global Recognition & Proven Results

- 26.6% F1 improvement showcased at SIGIR 2025

- 130,000 QA pairs generated across 7 domains for just $24.50

- Backed by LLM/RAG patents and deployed across 1,000+ enterprises

Makebot doesn’t just deliver technology—it delivers architecture, governance, and cost predictability, the three factors enterprises consistently underestimate.


The True Cost of In-House LLM Chatbots Is Higher Than It Appears

Building internally gives you control—but it also gives you responsibility for every cost, every risk, and every failure point.

The data is clear:

- Infrastructure scales unpredictably

- Talent shortages slow progress

- Compliance becomes costly under scrutiny

- Integrations require constant upkeep

- Data preparation consumes the bulk of time

- Monitoring, drift, and retraining generate continuous expense

- Total cost of ownership increases 30–50% beyond initial estimates

Enterprises are not failing for lack of generative AI innovation. They are failing because they underestimated the complexity of AI development at scale.

Makebot bridges that gap. With patented RAG technology, domain-specific agents, and globally recognized AI research, Makebot transforms enterprise ambition into operational reality—reducing cost, accelerating deployment, and ensuring long-term sustainability.

👉 Start your AI transformation today at www.makebot.ai or contact b2b@makebot.ai to explore how Makebot can help your organization lead the next era of AI in Enterprise.
