The Future of AI in Healthcare: Insights from Former CDC Director Dr. Rochelle Walensky

AI is reshaping healthcare and enterprise performance with real ROI, smart scaling, and trust.

At Future of Health’s invitation-only event, former CDC director Dr. Rochelle Walensky sat down with MobiHealthNews to discuss the potential and challenges of AI in healthcare.

As the global healthcare ecosystem accelerates its adoption of Generative AI, few voices are more valuable than that of someone who has led public health through a global crisis. At the Future of Health (FOH) annual summit, an invitation-only event gathering more than 50 influential leaders across health systems, academia, government, and technology, former CDC Director Dr. Rochelle Walensky provided a sharp, grounded assessment of where AI in Healthcare is truly heading.

Her perspective: AI will transform care delivery, diagnostics, and system efficiency, but only if healthcare leaders confront bias, redefine clinician training, and protect the central role of the patient.

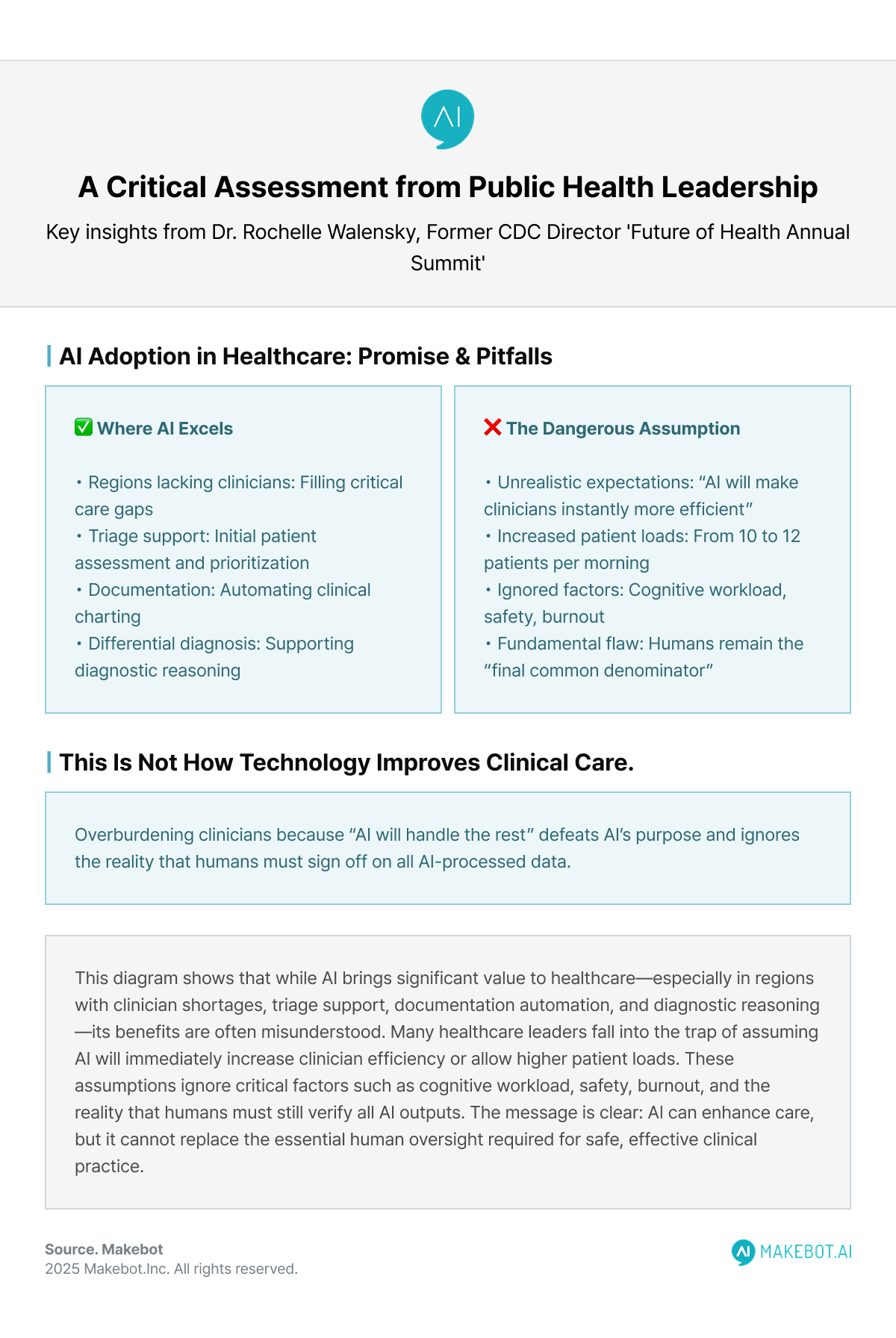

AI Adoption in Healthcare

Dr. Walensky emphasized that AI’s value is undeniable, especially in regions lacking clinicians. In places “where there’s an absence of physicians,” she noted, AI is already filling care gaps by supporting triage, documentation, and differential diagnosis. These applications show why global health systems are accelerating AI Development.

But her optimism comes with a warning: unrealistic expectations are being placed on clinicians. AI promises to automate charting and documentation, yet many healthcare leaders interpret this as an opportunity to simply increase patient loads, pushing physicians from “10 to 12 patients per morning because AI will handle the rest.”

Her message is blunt: this is not how technology improves clinical care.

When humans remain the “final common denominator”—the last to sign off on AI-processed data—overburdening them defeats AI’s purpose. The expectation that clinicians will instantly become more efficient simply because AI exists ignores cognitive workload, safety, and burnout.

This sets the stage for the next question: if AI will take on documentation and triage, how should we train clinicians to thrive?

A Call to Rethink Medical Training in the AI Era

One of Dr. Walensky’s most urgent points concerns the future of Medical Education. Current medical training, she argued, does not reflect the realities of a future where AI automates chart reviews, clinical summaries, or documentation.

Her questions go straight to the heart of healthcare workforce planning:

- What skills should future physicians focus on when AI handles administrative burdens?

- How should universities redesign competencies for diagnosis, reasoning, and data interpretation?

- If AI reshapes clinical practice within the next five years, why are medical schools still teaching for a pre-AI world?

This is a critical gap. Without updating training frameworks, healthcare systems risk a generation of clinicians unprepared for an AI-augmented environment—one where judgment, context, and ethical oversight matter more than ever.

That rethinking also intersects deeply with equity and bias—another domain where AI may silently propagate harm unless we actively intervene.

Bias in AI: The Problem Nobody Is Talking About Anymore

Perhaps the sharpest critique Dr. Walensky offered was aimed at the fading public conversation around AI bias. “It totally stopped,” she said, a concerning observation given AI’s expanding influence in decision-making.

She highlighted a particularly harmful pattern: underserved communities often appear “low-utilization” in datasets simply because they lack access to care. AI systems can misinterpret this as “lower medical need,” reinforcing inequities. This feedback loop, if left unchecked, can deeply distort care recommendations and exacerbate disparities.
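The feedback loop described above can be made concrete with a small sketch. This is an illustration only, not a model Dr. Walensky described: the `Patient` fields, the numbers, and the assumption that recorded utilization equals need multiplied by access are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    true_need: float  # hypothetical underlying medical need, 0..1
    access: float     # hypothetical access to care, 0..1


def observed_utilization(p: Patient) -> float:
    # Assumption: visits are only recorded when a patient can reach care,
    # so recorded utilization is need scaled by access.
    return p.true_need * p.access


def rank_by_utilization(patients):
    # A naive model that treats recorded utilization as a proxy for need.
    return sorted(patients, key=observed_utilization, reverse=True)


patients = [
    Patient("well_served_moderate_need", true_need=0.5, access=0.9),
    Patient("underserved_high_need", true_need=0.8, access=0.3),
]

ranking = [p.name for p in rank_by_utilization(patients)]
highest_need = max(patients, key=lambda p: p.true_need).name
print("ranked by utilization:", ranking)
print("highest true need:", highest_need)
```

In this toy setup the patient with the greatest need is ranked last, because limited access suppresses the utilization signal the model relies on. If resources then follow the ranking, access for that patient shrinks further, which is the loop she warned about.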

Her message is clear: AI does not solve bias—humans do. Clinicians must remain the interpretive layer that corrects AI’s blind spots, especially in nuanced diagnoses such as distinguishing pneumonia from congestive heart failure.

That brings us to another critical lever: orientation toward the patient, not the margin.

Keeping Patients at the Center

When asked how healthcare technology companies can balance innovation with patient needs, Dr. Walensky insisted on a patient-first philosophy.

Healthcare consumes 18% of U.S. GDP, yet its outcomes lag behind peer nations. If AI companies prioritize margin over mission, she warned, the industry will continue to optimize profit rather than improve care.

But she also offered a hopeful counterpoint: companies that deliver meaningful patient improvement may ultimately be the ones that increase revenue. Trust, performance, and health outcomes drive long-term adoption. This aligns with the broader industry movement toward value-based care, where Healthcare Technology solutions are incentivized for impact rather than volume.

This mission-first approach is vital because the technologies emerging now are pushing far beyond traditional care and require systems thinking.

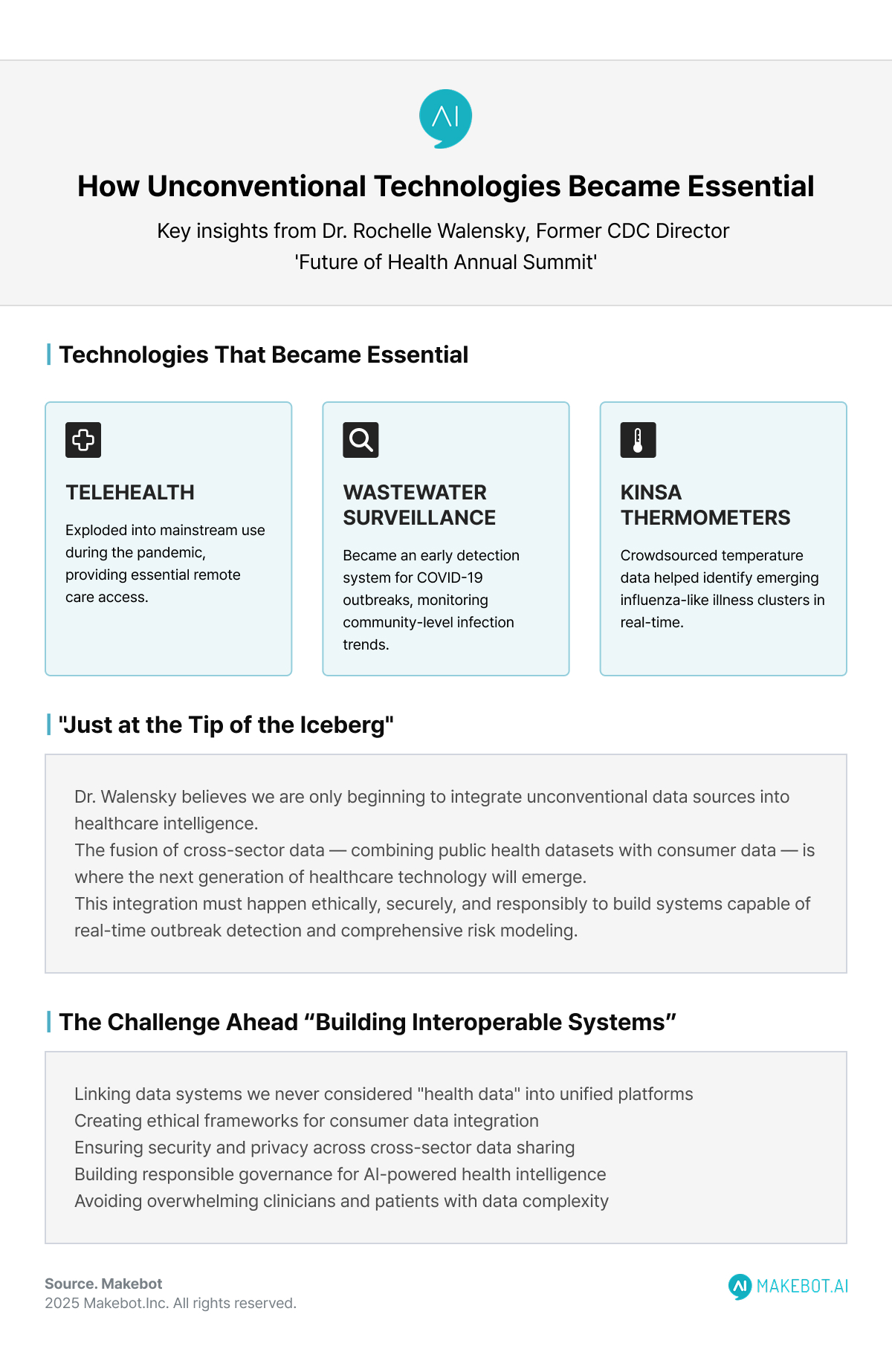

Lessons from the Pandemic

Dr. Walensky’s reflections on the COVID-19 pandemic underscored how quickly unconventional technologies became essential tools:

- Telehealth exploded into mainstream use—but has lost momentum due to shifting policy.

- Wastewater surveillance became an early detection system for outbreaks.

- Kinsa thermometers, which crowdsource temperature data, helped identify emerging influenza-like illness clusters.

But her most fascinating examples came from global innovation:

- In Vietnam, clinicians used AI to read chest X-rays for tuberculosis screening in school clinics.

- AI-based models tracked Legionella outbreaks by analyzing cooling tower locations.

- Even OpenTable reservation patterns became an epidemiological signal—as she explained, “people do not go out if they are not feeling well.”

These examples demonstrate how non-traditional datasets (restaurants, travel, social behavior) may be the next frontier of AI in Healthcare. Health data no longer exists in silos. The challenge ahead: linking data systems we never considered “health data” into interoperable, ethical platforms without overwhelming clinicians or patients.

Linking Systems We Never Considered “Health Data”

Dr. Walensky believes we are “just at the tip of the iceberg” in integrating unconventional data sources. Airports, reservation platforms, population-level sensors, and environmental monitoring tools could all contribute to real-time outbreak detection and risk modeling.

The challenge now is building interoperable systems capable of merging public health data with consumer data—ethically, securely, and responsibly. This fusion of cross-sector data is where the next generation of Healthcare Technology will emerge.

But as Dr. Walensky makes clear, the value does not come from scale alone—it comes from thoughtfully building those pipelines and ensuring the human finger remains on the pulse.

Conclusion

Dr. Rochelle Walensky’s perspective is a powerful reminder: AI will not transform healthcare unless leaders intentionally redesign systems, training, and policy.

Success depends on three pillars:

- Empowering clinicians, not overwhelming them

- Updating Medical Education to match the realities of AI-driven practice

- Confronting bias and restoring the central role of human judgment

The future of Generative AI and AI in Healthcare is neither utopian nor dystopian—it is entirely dependent on how we choose to build, govern, and deploy it.

Makebot: Human-Centered AI for the Next Era of Healthcare

As Dr. Walensky stressed, the future of AI in healthcare demands tools that reduce clinician burden, strengthen decision-making, and keep patients at the center. This is exactly where Makebot bridges the gap. Our industry-specific LLM agents—already used by leading hospitals such as Seoul National University Hospital and Gangnam Severance Hospital—help healthcare organizations deploy safe, efficient, and bias-aware AI that truly supports clinical teams. Whether upgrading your chatbot with BotGrade, enhancing patient engagement through MagicTalk, or processing complex medical data with MagicSearch, Makebot provides practical, ready-to-deploy AI solutions that align with real clinical needs.

Our globally verified platforms, including HybridRAG (presented at SIGIR 2025 after achieving a 26.6% accuracy improvement and up to 90% cost reduction), ensure enterprises can move quickly from PoC to real-world impact. Backed by multiple LLM/RAG patents and trusted by more than 1,000 organizations, Makebot enables healthcare systems to transform Generative AI from a promising idea into measurable clinical outcomes and sustainable efficiency gains.

👉 Start your AI journey today: www.makebot.ai

📩 Contact us at b2b@makebot.ai to discuss how we can help you lead the AI shift.
