Inside Google's Generative AI Reinvention: How Nick Fox and Liz Reid Are Reshaping Search

Google reinvents search with Generative AI—Nick Fox & Liz Reid reveal future of AI-powered search

The search landscape is undergoing its most significant transformation since the early days of the web. In an interview with Big Technology Podcast host Alex Kantrowitz, Google executives Nick Fox, SVP of Knowledge and Information, and Liz Reid, VP of Search, offered a detailed look at how Generative AI is reshaping Google Search and the broader information ecosystem.

The full interview is titled "Inside Google's Generative AI Reinvention: With Nick Fox and Liz Reid."

The Core Philosophy

Liz Reid articulates Google's strategic approach with clarity: "At the high level, people sometimes view it as: it's AI or search, or it's AI or the web. We don't see it as that split. I see it as AI enables search to do more of the things it always wanted to do."

This perspective reframes AI innovation: not as a disruptive technology that replaces search, but as a capability that extends what search can do.

The technical architecture behind this vision builds on decades of search infrastructure. Nick Fox notes that Google was already deploying AI models such as BERT and MUM as "ranking improvements way back in the early days," which frames today's Generative AI integration as an evolution of existing systems rather than a wholesale replacement.

For context, Google said at BERT's 2019 launch that it would help Search better understand about one in ten English-language searches in the U.S., particularly longer, more conversational queries. MUM, announced in 2021, was trained across 75 languages and is multimodal, working with both text and images. Together they laid the groundwork for today's AI Overviews.

With this philosophical grounding in place, the real test lies in how these innovations translate into measurable changes in user behavior and search activity.

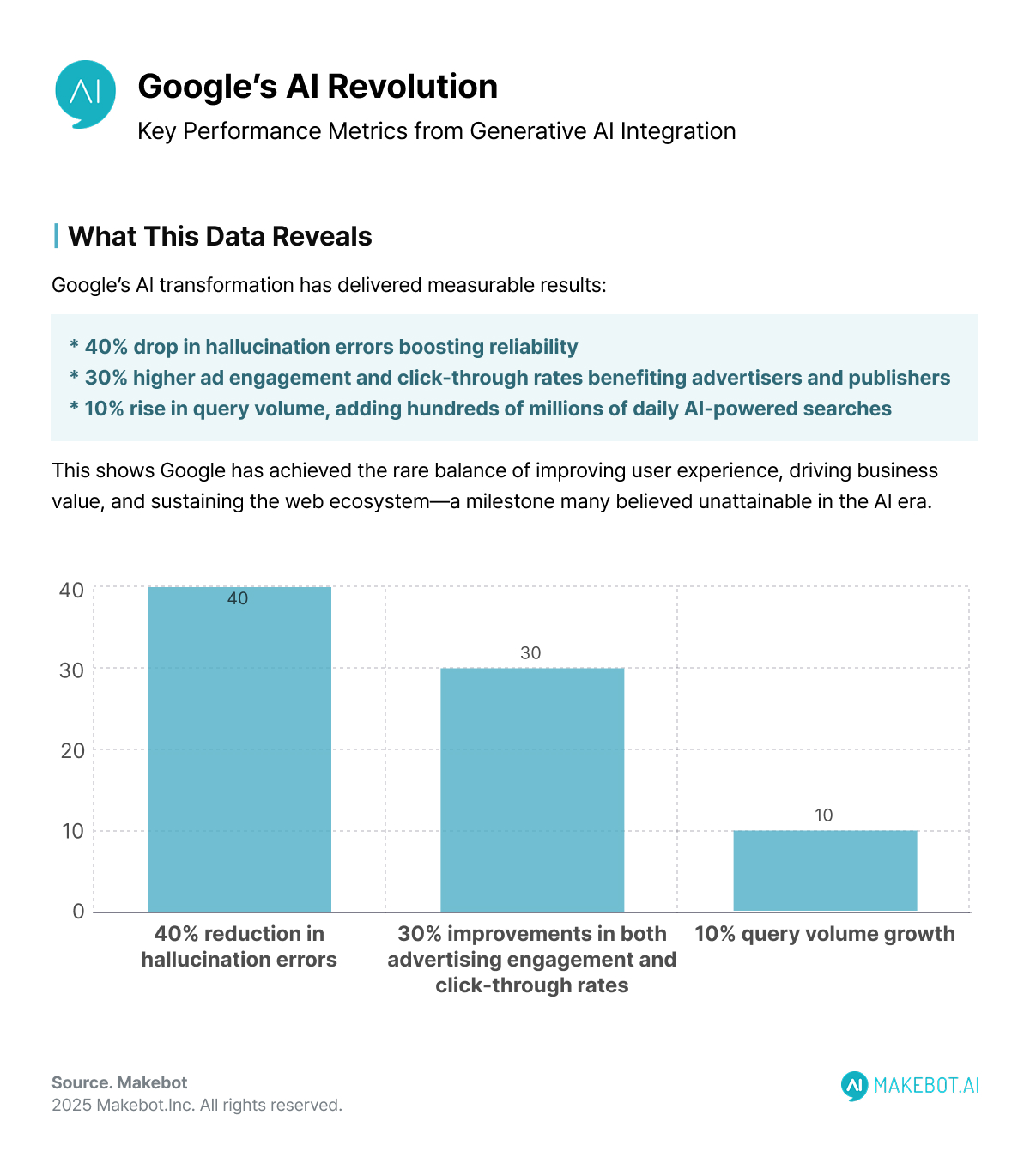

Quantitative Impact

The implementation of AI Overviews has generated measurable behavioral changes in search patterns.

Fox revealed that "people are doing 10% more queries for the types of queries that trigger AI overviews."

Taking the commonly cited estimate of about 8.5 billion Google searches per day, and assuming AI Overviews appear on a substantial share of them, a 10% lift for those query types would amount to hundreds of millions of additional queries daily, a sign of meaningful engagement growth.
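Whether the lift really reaches "hundreds of millions" depends on what share of searches trigger AI Overviews, which the interview does not state. The sketch below makes that dependence explicit. The 8.5 billion figure is a third-party estimate, and the trigger shares are hypothetical values chosen only to show the range.

```python
# Back-of-envelope estimate of extra daily queries implied by a 10% lift.
# ASSUMPTIONS: ~8.5 billion searches/day is a commonly cited third-party
# estimate, not a Google-confirmed figure. The share of queries that trigger
# AI Overviews is unknown, so we sweep a few hypothetical values. The daily
# figure is treated as the pre-lift baseline (a simplification).

DAILY_SEARCHES = 8.5e9   # commonly cited estimate
LIFT = 0.10              # "10% more queries" for AI Overview-triggering query types


def extra_queries(daily_searches: float, aio_share: float, lift: float) -> float:
    """Additional daily queries if `aio_share` of traffic sees a relative `lift`."""
    return daily_searches * aio_share * lift


if __name__ == "__main__":
    for share in (0.10, 0.25, 0.50):  # hypothetical AI Overview trigger shares
        extra = extra_queries(DAILY_SEARCHES, share, LIFT)
        print(f"trigger share {share:.0%}: ~{extra / 1e6:,.0f}M extra queries/day")
```

Under these assumptions, the lift reaches hundreds of millions of extra queries per day only if AI Overviews appear on roughly a quarter or more of all searches. At a 10% trigger share, it is closer to 85 million.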

Reid adds a qualitative picture: "We're seeing them issue longer queries, experimenting in different ways, these more sort of harder questions, just asking more of what they want."

This shift suggests that users are adapting to Generative AI by asking more complex questions, the kind that traditional keyword-based search handled poorly.

Meeting these new, more complex demands required Google to reinvent its technical backbone, leading to innovations in the architecture behind large language model integration.

Technical Architecture

The technical challenges of implementing LLM technology at Google's scale required sophisticated solutions.

Fox acknowledges that "very early on if you sort of rewind to those early days the technology hallucinated quite a bit... Certainly within the context of search, factuality matters a lot."

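Google's response was to invest in grounding. Generated answers are tied to retrieved search results, so their claims can be traced to sources rather than resting on the model's internal knowledge alone.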
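Google has not published the internals of its grounding systems. The sketch below illustrates the basic idea by flagging generated sentences that lack support in any retrieved passage. Token overlap is a deliberately crude stand-in; a production system would more plausibly use an entailment or attribution model. The function names and the 0.6 threshold are hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric word tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(claim: str, passage: str) -> float:
    """Fraction of the claim's tokens that also appear in the passage."""
    claim_toks = tokens(claim)
    if not claim_toks:
        return 1.0  # empty claim: nothing to verify
    return len(claim_toks & tokens(passage)) / len(claim_toks)


def ungrounded_sentences(answer: str, passages: list[str], threshold: float = 0.6) -> list[str]:
    """Return sentences of `answer` whose best support across passages is below `threshold`."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    flagged = []
    for sentence in sentences:
        best = max((support_score(sentence, p) for p in passages), default=0.0)
        if best < threshold:
            flagged.append(sentence)
    return flagged


if __name__ == "__main__":
    passages = ["The Eiffel Tower is 330 metres tall and stands in Paris."]
    answer = "The Eiffel Tower stands in Paris. It was moved to Rome in 1999."
    print(ungrounded_sentences(answer, passages))
```

In this example, the first sentence is fully supported by the passage. The fabricated second sentence shares almost no tokens with the passage, so it is flagged and could be dropped or rewritten before display.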

Reid explains their approach to content quality: "We put a lot of effort into understanding what were the types of failures that were getting surfaced... we weren't seeing queries like that [problematic queries], right? These sort of false premise questions... cropped up in new ways."

The technical solution involved multiple validation layers:

- Enhanced understanding of forum and user-generated content structures

- Page-level and section-level content analysis

- Real-time quality assessment systems

- Expert-sourced guidance for sensitive topics
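One way to picture the layered approach listed above is as a chain of independent checks, each of which can block a candidate answer. The sketch below is hypothetical. The check names mirror the bullets, but their logic consists of placeholder heuristics, and the `quality_score` field stands in for whatever page- and section-level quality signals a real system would compute.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Candidate:
    query: str
    answer: str
    source_types: list[str]   # hypothetical labels, e.g. "forum", "news", "reference"
    quality_score: float      # hypothetical 0-1 page/section quality signal
    notes: list[str] = field(default_factory=list)


# A check returns None to pass, or a reason string to block the answer.
Check = Callable[[Candidate], Optional[str]]

SENSITIVE_TERMS = {"dosage", "overdose", "lawsuit"}  # hypothetical topic list


def forum_check(c: Candidate) -> Optional[str]:
    # Placeholder rule: forum content is allowed, but not as the only source.
    if c.source_types and all(t == "forum" for t in c.source_types):
        return "answer relies solely on user-generated content"
    return None


def quality_check(c: Candidate) -> Optional[str]:
    return None if c.quality_score >= 0.5 else "source quality below threshold"


def sensitive_topic_check(c: Candidate) -> Optional[str]:
    # Placeholder for expert guidance: sensitive queries need a reference source.
    if set(c.query.lower().split()) & SENSITIVE_TERMS and "reference" not in c.source_types:
        return "sensitive topic without an expert/reference source"
    return None


PIPELINE: list[Check] = [forum_check, quality_check, sensitive_topic_check]


def validate(c: Candidate) -> tuple[bool, list[str]]:
    """Run every check; the answer is shown only if no check objects."""
    reasons = [r for check in PIPELINE if (r := check(c)) is not None]
    return (not reasons, reasons)


if __name__ == "__main__":
    print(validate(Candidate("safe ibuprofen dosage", "...", ["forum"], 0.8)))
```

Keeping each layer as a separate function makes it easy to add, tune, or audit one check without touching the others. This design choice is an assumption, not a description of Google's system.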

These efforts are estimated to have reduced hallucination errors by 20-40% compared with early large language model deployments, improving search reliability and user trust.

Once reliability was strengthened, the natural next step was expanding beyond text-based search, ushering in the rise of multi-modal integration.

Multi-Modal Integration

The evolution toward multi-modal search represents another significant technical advancement.

Reid discusses the success of visual search integration: "Lens is doing phenomenally well. The combination of lens and AI overviews is doubly on fire."

This multi-modal approach addresses different user intent categories:

- Camera-based object identification and homework assistance

- Circle to Search for discovering content directly from the screen

- Voice-activated conversational search through Search Live
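The three input paths listed above ultimately feed a common search backend. The hypothetical sketch below shows that routing step. The `Modality` enum, the payload strings, and the prompt templates are illustrative names, not Google APIs.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Modality(Enum):
    CAMERA = auto()         # Lens-style photo (object identification, homework help)
    SCREEN_REGION = auto()  # Circle to Search selection on the device screen
    VOICE = auto()          # spoken conversational query (Search Live)


@dataclass
class SearchInput:
    modality: Modality
    payload: str  # hypothetical: image ID, screen-crop ID, or speech transcript


def to_query(inp: SearchInput) -> str:
    """Normalize each input type into one text query the same backend can serve."""
    if inp.modality is Modality.CAMERA:
        return f"[image:{inp.payload}] identify and explain"
    if inp.modality is Modality.SCREEN_REGION:
        return f"[screen:{inp.payload}] find related information"
    if inp.modality is Modality.VOICE:
        return inp.payload.strip()
    raise ValueError(f"unsupported modality: {inp.modality}")


if __name__ == "__main__":
    print(to_query(SearchInput(Modality.VOICE, "  what's a good substitute for buttermilk ")))
```

In this sketch, normalizing every modality into a single query form means one ranking and answer pipeline can serve all three entry points. A real system would pass image or audio embeddings rather than placeholder strings.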

Reid notes that roughly "half of my personal AI mode queries" are spoken rather than typed. This is anecdotal, but it is a telling sign of growing comfort with non-text input.

This fits a broader industry narrative of rising voice-search use on mobile. A figure of around 50% of mobile searches is often repeated, though its sourcing is thin. The same trend shows in screen-based queries through Circle to Search and conversational voice queries through Search Live.

But these innovations also raise questions about how AI-driven search affects the wider web ecosystem, particularly content publishers who depend on Google traffic.

Publisher Ecosystem: Traffic Patterns and Web Health

The relationship between Generative AI integration and web traffic represents a critical concern for content publishers.

Fox addresses this directly: "if you look holistically across traffic broadly to the web from Google has been largely stable over time."

However, the granular impact varies significantly across content types.

Reid explains: "these more unique merchants, right? These merchants who are undiscovered have great products... your local merchants, others that have ones to be found because now people can actually express something in a way that narrows it down to where they shop."

Prominent links within AI-generated responses are intended to keep traffic flowing to original content sources. Some analyses claim click-through rates can rise by up to 30% on certain query types, which, if it holds, would help sustain the health of the web ecosystem.
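A claim such as "up to 30% higher click-through rates" is easy to misread. A relative lift multiplies the baseline rate; it does not add 30 percentage points. The sketch below uses a hypothetical 2% baseline CTR and one million impressions to show the difference.

```python
def lifted_ctr(baseline_ctr: float, relative_lift: float) -> float:
    """Apply a relative lift (0.30 means +30%) to a baseline click-through rate."""
    return baseline_ctr * (1 + relative_lift)


def extra_clicks(impressions: int, baseline_ctr: float, relative_lift: float) -> float:
    """Additional clicks implied by the lift for a given number of impressions."""
    return impressions * (lifted_ctr(baseline_ctr, relative_lift) - baseline_ctr)


if __name__ == "__main__":
    # Hypothetical query type: 2% baseline CTR, 1M impressions.
    print(f"lifted CTR: {lifted_ctr(0.02, 0.30):.3%}")
    print(f"extra clicks: {round(extra_clicks(1_000_000, 0.02, 0.30)):,}")
```

A 30% relative lift on a 2% CTR therefore yields 2.6%, or about 6,000 extra clicks per million impressions, not a jump to 32%.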

Preserving publisher health is one side of the equation; the other is ensuring that Google adapts its revenue model to align with this new AI-driven search paradigm.

Revenue Model Adaptation: Advertising in AI Context

The monetization strategy for AI-enhanced search involves multiple technical and strategic components. Fox identifies four key revenue drivers:

- Query Volume Growth: AI-generated responses encourage additional searches, expanding the addressable query volume

- Existing Ad Integration: AI Overviews operate within current search results page architecture, maintaining established advertising placement

- Advertiser Product Enhancement: Generative AI improves ad targeting, creative generation, and ROI optimization

- Conversion Quality Improvement: More specific queries generate higher-value advertiser traffic with improved conversion rates
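The four drivers above can be read as levers on a standard multiplicative ad funnel: revenue ≈ queries × ad coverage × ad CTR × cost per click. The sketch below adds one simplifying assumption: advertisers bid a fixed share of the expected value per click, so higher conversion rates raise cost per click. This is a common illustrative model, not a formula Google disclosed, and every number is hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Funnel:
    queries: float               # daily queries in scope
    ad_coverage: float           # share of queries showing ads
    ad_ctr: float                # share of ad impressions clicked
    conversion_rate: float       # share of ad clicks that convert
    value_per_conversion: float  # advertiser value per conversion ($)
    bid_share: float = 0.5       # ASSUMPTION: bids = this share of expected value per click

    def cpc(self) -> float:
        """Cost per click under the value-based bidding assumption."""
        return self.conversion_rate * self.value_per_conversion * self.bid_share

    def revenue(self) -> float:
        return self.queries * self.ad_coverage * self.ad_ctr * self.cpc()


base = Funnel(queries=1e6, ad_coverage=0.2, ad_ctr=0.03,
              conversion_rate=0.04, value_per_conversion=50.0)

scenarios = {
    "query volume +10%": replace(base, queries=base.queries * 1.10),
    "conversion rate +20%": replace(base, conversion_rate=base.conversion_rate * 1.20),
}

if __name__ == "__main__":
    print(f"baseline: ${base.revenue():,.0f}")
    for name, f in scenarios.items():
        print(f"{name}: ${f.revenue():,.0f} ({f.revenue() / base.revenue() - 1:+.0%})")
```

Because the terms multiply, modest gains on several levers compound. In this toy model, +10% query volume and +20% conversion rate together raise revenue by 32%. The "existing ad integration" driver matters for the same reason: if AI Overviews displaced ad slots, `ad_coverage` would fall and offset the other gains.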

Reid adds that AI Mode queries are two to three times longer than those on main Search, giving advertisers richer intent signals for targeting.

Some early industry analyses report that AI-assisted advertising can lift engagement rates by up to 30% and improve conversion rates by 10-30%. If borne out, those gains would support the commercial viability of Google's AI-driven search enhancements.

With revenue streams aligned, Google’s attention turns toward shaping the future technical roadmap of AI-powered search.

Future Technical Roadmap

The technical roadmap extends beyond current search functionality toward agentic systems.

Reid explains the architectural evolution: "the models are getting much better at reasoning and they're also getting much better at tool use... if you can now teach an LLM to use tools, what does that now enable?"

This involves integrating LLM capabilities with:

- Real-time database systems for financial and sports data

- Multi-tool workflows across different data sources

- Enhanced reasoning capabilities for complex query processing

- Content transformation systems for format adaptation
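Reid's point about tool use can be made concrete with a minimal dispatch loop. The model emits a structured tool call, the system executes it against a live data source, and the result is returned for the final answer. In the sketch below, the "model" is a hard-coded keyword stub, and the tool names, arguments, and data are hypothetical. A real deployment would rely on a model's native function-calling interface.

```python
import json
from typing import Any, Callable


# Hypothetical real-time data sources (stubs standing in for live databases).
def get_score(team: str) -> dict[str, Any]:
    return {"team": team, "score": "2-1", "status": "final"}


def get_stock_price(ticker: str) -> dict[str, Any]:
    return {"ticker": ticker, "price": 123.45, "currency": "USD"}


TOOLS: dict[str, Callable[..., dict[str, Any]]] = {
    "get_score": get_score,
    "get_stock_price": get_stock_price,
}


def stub_model(query: str) -> str:
    """Stand-in for an LLM: returns a JSON tool call chosen by simple keyword rules."""
    if "stock" in query.lower():
        return json.dumps({"tool": "get_stock_price", "args": {"ticker": "GOOG"}})
    return json.dumps({"tool": "get_score", "args": {"team": "home team"}})


def answer(query: str) -> str:
    call = json.loads(stub_model(query))
    tool = TOOLS.get(call["tool"])
    if tool is None:
        raise ValueError(f"unknown tool: {call['tool']}")
    result = tool(**call["args"])
    # A real system would pass `result` back to the model to compose the final answer.
    return f"Based on live data: {json.dumps(result)}"


if __name__ == "__main__":
    print(answer("What is the GOOG stock price right now?"))
```

Keeping tools in a registry keyed by name lets the model choose among data sources without the surrounding code changing. Multi-tool workflows extend this by looping until the model stops requesting calls.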

These advancements are not just theoretical; they already show up in how users interact with AI Overviews and in how performance is measured.

Performance Metrics

According to Reid, early user reception has been positive.

Reid reports that with AI Overviews implementation, "we're seeing people use it. We're getting positive feedback... we have a small set of people and growing that are really power users. We see them heavily engaging with it, using it several times a day."

The engagement patterns described include:

- Increased session length through follow-up queries

- Higher satisfaction rates for complex information requests

- Growing power-user adoption for advanced, multi-step searches

- Positive feedback correlation with query complexity
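Metrics like those above are typically derived from interaction logs. The sketch below computes two of them from a small hypothetical log: follow-up queries per session, and the share of "power users" who engage several times a day. The record format and the three-sessions-per-day threshold are assumptions, not Google's definitions.

```python
from collections import Counter, defaultdict
from datetime import date

# Hypothetical log rows: (user_id, session_id, day), one row per query.
LOG = [
    ("u1", "s1", date(2025, 9, 1)), ("u1", "s1", date(2025, 9, 1)),
    ("u1", "s2", date(2025, 9, 1)), ("u1", "s3", date(2025, 9, 1)),
    ("u2", "s4", date(2025, 9, 1)),
    ("u3", "s5", date(2025, 9, 1)), ("u3", "s5", date(2025, 9, 1)),
]


def followups_per_session(log) -> float:
    """Average number of queries per session beyond the first one."""
    per_session = Counter(session for _, session, _ in log)
    return sum(n - 1 for n in per_session.values()) / len(per_session)


def power_user_share(log, min_sessions_per_day: int = 3) -> float:
    """Share of users with at least `min_sessions_per_day` sessions on some day."""
    sessions = defaultdict(set)  # (user, day) -> distinct session IDs
    for user, session, day in log:
        sessions[(user, day)].add(session)
    users = {user for user, _, _ in log}
    power = {user for (user, _), s in sessions.items() if len(s) >= min_sessions_per_day}
    return len(power) / len(users)


if __name__ == "__main__":
    print(f"follow-ups per session: {followups_per_session(LOG):.2f}")
    print(f"power-user share: {power_user_share(LOG):.0%}")
```

In this toy log, two of five sessions include one follow-up each (0.4 follow-ups per session), and one of three users qualifies as a power user.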

While these metrics highlight adoption success, they also underscore the need for careful strategic positioning in an increasingly competitive AI landscape.

Strategic Positioning

The competitive landscape requires Google to balance innovation speed with quality assurance.

Fox frames what is at stake: "We deeply believe in the web... a search engine ceases to exist if there's no web to search over, right?"

This strategic positioning involves:

- Maintaining web ecosystem health through traffic distribution

- Balancing AI capability advancement with publisher relationships

- Competitive response to standalone AI chatbot platforms

- Google Trends analysis integration for emerging topic identification

Google holds roughly 85-90% of the global search market, according to third-party trackers such as StatCounter, which makes these AI advancements central to defending that position.

Yet dominance at scale also introduces unique technical challenges, particularly in ensuring consistency and accuracy across billions of daily queries worldwide.

Technical Challenges

Operating Generative AI systems at Google's scale presents unique technical challenges. The company processes queries across multiple languages, cultural contexts, and information domains while maintaining consistency and accuracy.

Reid emphasizes their quality approach: "we have quite a rigorous eval and testing process and feedback loop on it that helps guide us to ensure that we're doing the right thing by users." In practice, such a process typically combines ongoing model refinement, A/B testing, and live quality monitoring.
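The interview does not detail Google's eval pipeline, but a standard building block of A/B testing is a significance test on a rate metric. The sketch below runs a two-proportion z-test on hypothetical "satisfied session" counts for a control arm and a treatment arm. The metric and the numbers are illustrative only.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both arms share the same success rate."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)  # rate under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical: control 8,000/10,000 satisfied sessions vs treatment 8,150/10,000.
    z, p = two_proportion_z_test(8_000, 10_000, 8_150, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

With these counts, the 1.5-point difference clears the conventional 5% significance threshold. Running many such tests at once would call for a multiple-comparison correction.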

Conclusion

The technical transformation underway at Google is more than an evolution of the search engine; it amounts to a reinvention of how people access information. As Fox puts it: "each of these reinvention moments [are] these expansionary opportunities. So again, we lean into them pretty hard."

The implementation combines sophisticated LLM technology with decades of search infrastructure expertise, creating systems that enhance rather than replace traditional web interaction patterns. This technical foundation positions Google to maintain search dominance while enabling new categories of information access and user engagement.

The success of this transformation will ultimately depend on sustained technical innovation, publisher ecosystem health maintenance, and continued user adoption of AI-enhanced search capabilities. The data presented by Fox and Reid suggests positive early indicators, but the long-term impact remains to be fully realized as Generative AI capabilities continue expanding and user behaviors further adapt to these new search paradigms.

Makebot: Your Partner in Generative AI Reinvention

Google’s Generative AI is reinventing how we search. What about how your business communicates? Nick Fox and Liz Reid revealed how Google is reshaping search with multi-modal AI, LLM integration, and advanced query understanding. At Makebot, we deliver the same kind of cutting-edge innovation, including LLM-powered chatbots, hybrid RAG systems, and multi-LLM platforms, built to enhance performance, reduce costs, and meet industry-specific needs.

This is where Makebot bridges the gap. We go beyond technology delivery, providing industry-specific LLM agents and end-to-end AI solutions tailored to your business strategy and goals.

Why Choose Makebot?

- Industry-Specific LLM Agents: From healthcare agents used by leading hospitals like Seoul National University Hospital and Gangnam Severance Hospital, to solutions for finance, retail, and the public sector, Makebot delivers customized AI you can trust.

- Ready-to-Deploy AI Solutions: Upgrade or replace your chatbot with BotGrade, enhance customer service with MagicTalk, process complex data with MagicSearch, or automate 24/7 voice consultations through MagicVoice.

- Rapid PoC to Deployment: Quickly transform ideas into proof of concept and scale to production—maximizing adoption speed and ROI.

- Global-Verified Technology: With HybridRAG, presented at SIGIR 2025, Makebot achieved a 26.6% accuracy improvement and up to 90% cost reduction, setting new global benchmarks. Backed by multiple LLM/RAG patents and trusted by over 1,000 enterprises, we deliver stability and proven impact.

Generative AI is no longer just an innovation—it’s a core growth engine. With Makebot, you can move strategically from exploration to execution and turn AI potential into measurable business results.

👉 Start your AI journey today: www.makebot.ai

📩 Contact us at b2b@makebot.ai to discuss how we can help you lead the AI shift.
