RAG vs. Fine-Tuning in Healthcare AI: Which Model Predicts Patient Outcomes Better?

RAG vs Fine-Tuning: Which AI model better predicts patient outcomes? Hybrid systems lead the way.

Healthcare organizations are racing to deploy AI systems that improve patient outcomes while controlling operational costs. With the rapid evolution of Large Language Models (LLMs), two dominant adaptation strategies have emerged: Retrieval Augmented Generation (RAG AI) and Fine-Tuning. Choosing between these methods, or blending them, has direct implications for clinical decision accuracy, patient safety, and the efficiency of modern healthcare delivery.


Understanding the Technical Landscape

Modern LLMs represent a sharp departure from earlier rule-based expert systems. Their transformer architectures contain billions of parameters, enabling nuanced understanding of medical literature, structured patient data, and complex clinical language. This capacity explains their rise in healthcare AI, but it also exposes a challenge: general-purpose models are not inherently designed for medicine.

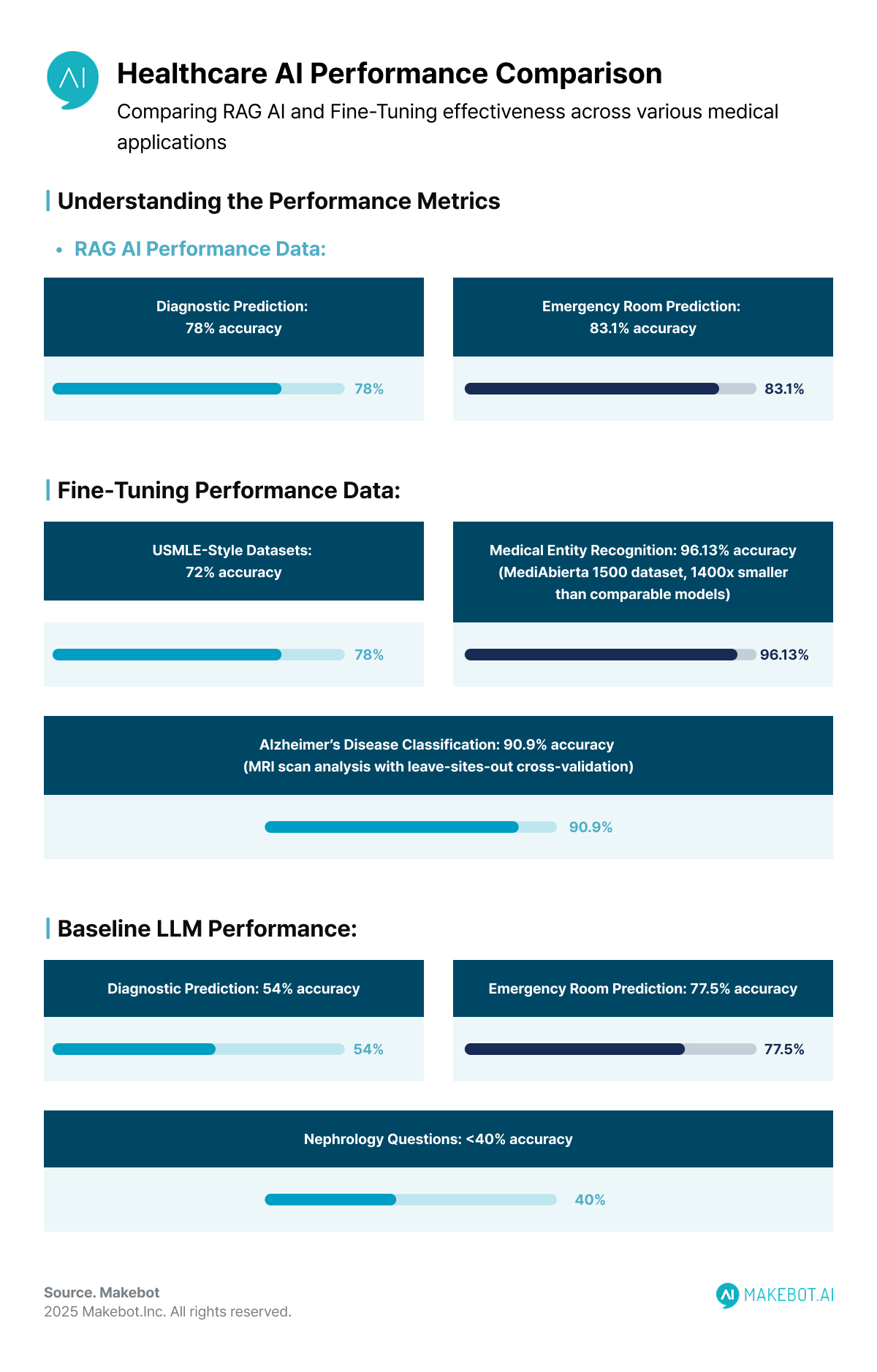

For example, studies show that baseline LLMs answer nephrology-specific questions with less than 40% accuracy when checked against structured literature reviews.

Such performance gaps highlight why domain-specific adaptation is not optional but essential—creating the space where RAG AI and Fine-Tuning deliver complementary value.

RAG AI: Real-Time Knowledge Integration

Retrieval Augmented Generation works by coupling a generative model with external, continuously updated data sources. Instead of relying solely on memorized knowledge, the model queries medical databases, journals, and clinical guidelines before generating its output.
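The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the knowledge base entries are invented placeholders, the retriever is a simple bag-of-words cosine similarity rather than a real vector index, and `generate` is a hypothetical stand-in for whatever LLM call a deployment would use.

```python
import math
import re
from collections import Counter

# Invented placeholder snippets standing in for a curated medical knowledge base.
KNOWLEDGE_BASE = [
    {"source": "guideline-A", "text": "Drug X dose should be reduced in patients with impaired kidney function."},
    {"source": "guideline-B", "text": "Annual eye screening is recommended for patients with diabetes."},
    {"source": "journal-C", "text": "Early mobility after surgery shortens hospital stay."},
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, k=2):
    """Return up to k passages with non-zero similarity, best match first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d["text"]))), d) for d in KNOWLEDGE_BASE]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]

def build_prompt(query, passages):
    """Place the retrieved passages, tagged with their sources, ahead of the question."""
    context = "\n".join(f"[{p['source']}] {p['text']}" for p in passages)
    return f"Answer using only the cited context.\n\nContext:\n{context}\n\nQuestion: {query}"

def generate(prompt):
    """Hypothetical placeholder for an LLM call; a real system would query a model here."""
    return f"(model output for a {len(prompt)}-character prompt)"

def answer(query):
    """Retrieve first, then generate, returning the sources so a clinician can check them."""
    passages = retrieve(query)
    return generate(build_prompt(query, passages)), [p["source"] for p in passages]
```

Returning the source identifiers alongside the generated text is what makes the output traceable: the reader can check each claim against the passage it came from.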

Performance Metrics and Clinical Impact

- Accuracy gains: In diagnostic prediction, RAG AI increased accuracy to 78% compared with 54% for base LLMs.

- Emergency applications: RAG-enhanced models lifted emergency room prediction accuracy from 77.5% to 83.1% when integrating machine learning-based probabilities.

- Industry case study: Apollo 24|7’s use of MedPaLM with RAG powered a Clinical Intelligence Engine that delivers de-identified patient insights and access to the latest clinical research, showcasing RAG’s ability to stay current without retraining.

Technical Architecture Advantages

- Up-to-date information: Constant adaptation to evolving medical evidence, drug interactions, and treatment protocols.

- Traceability: Clinicians can validate outputs against cited sources, improving trust.

- Reduced hallucination risk: Grounding responses in verified literature lowers the chances of misleading outputs.

Yet, while RAG AI shines in staying current, it lacks the deep embedded expertise that Fine-Tuning provides.

Fine-Tuning: Deep Domain Specialization

Fine-Tuning adapts a pre-trained LLM by continuing its training on specialized medical datasets, encoding domain knowledge directly into the model’s parameters. This makes the model more fluent in clinical reasoning and medical workflows.

Quantified Performance Improvements

- The Med42 study showed Fine-Tuning achieved 72% accuracy on USMLE-style datasets, outperforming general-purpose LLMs.

- MediAlbertina 1500, a parameter-efficient model, reached 96.13% accuracy in medical entity recognition while being 1,400x smaller than comparable models.

Specialized Clinical Use Cases

- Echocardiography Reporting: EchoGPT, a Fine-Tuned LLaMA-2 variant, matched board-certified cardiologists in echocardiogram interpretation.

- Clinical Trial Screening: Fine-tuned deep learning models demonstrated strong diagnostic performance. In Alzheimer’s disease classification, transfer learning with MRI scans achieved 90.9% accuracy in leave-sites-out cross-validation, with sensitivity of 83.8% and specificity of 94.2%. Independent validation further confirmed robustness, reaching 94.5% (AIBL), 93.6% (MIRIAD), and 91.1% (OASIS) — all surpassing typical human screening benchmarks (~85–88%).
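For readers less familiar with the metrics quoted above, the following sketch shows how accuracy, sensitivity, and specificity are derived from a confusion matrix. The counts are invented for illustration and are not taken from any of the cited studies.

```python
def screening_metrics(tp, fp, tn, fn):
    """Compute standard screening metrics from confusion-matrix counts.

    tp/fn: diseased cases classified correctly/incorrectly.
    tn/fp: healthy cases classified correctly/incorrectly.
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,     # share of all cases classified correctly
        "sensitivity": tp / (tp + fn),     # share of diseased cases detected
        "specificity": tn / (tn + fp),     # share of healthy cases correctly cleared
    }

# Invented counts: 100 diseased and 100 healthy cases.
metrics = screening_metrics(tp=80, fp=10, tn=90, fn=20)
print(metrics)
```

A model can post high accuracy while still missing many true cases, which is why sensitivity and specificity are reported alongside it.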

While Fine-Tuning delivers unmatched domain expertise, its static nature raises concerns when new medical knowledge rapidly evolves. This leads us to compare both approaches more directly.

Comparative Analysis: Clinical Decision Support

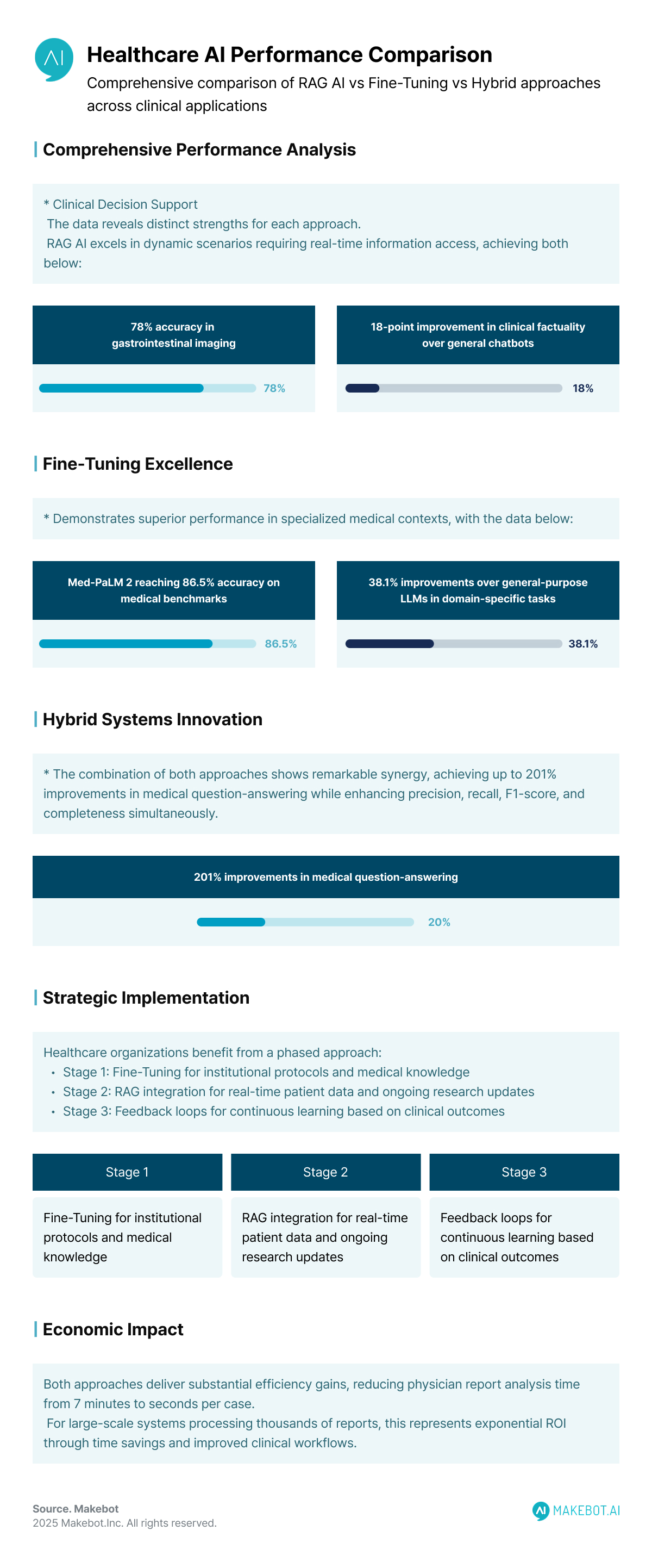

When evaluated head-to-head, RAG AI and Fine-Tuning show distinct strengths:

- Diagnostic accuracy: In gastrointestinal imaging, RAG-enhanced models reached 78% accuracy versus 54% for base LLMs. Meanwhile, Fine-Tuned Med-PaLM 2 achieved 86.5% accuracy on medical benchmarks, highlighting its depth in specialized contexts.

- Real-world applications: Almanac, a RAG-based system, outperformed ChatGPT in clinical factuality with an 18-point improvement, especially in cardiology (91% vs. 69%). Conversely, Fine-Tuned systems showed consistent gains in domain-specific tasks, in some cases improving accuracy by 38.1% compared to general-purpose LLMs.

The conclusion is clear: one approach is not inherently better—it depends on the clinical scenario.


Hybrid Approaches: Maximizing Clinical Value

Healthcare organizations increasingly combine RAG AI and Fine-Tuning to balance their strengths.

Performance Synergy

- Hybrid systems report up to 201% improvements in medical question-answering.

- Gains appear across precision, recall, F1-score, and completeness simultaneously.

- Training time is reduced without sacrificing domain specialization.

Strategic Deployment

- Stage 1: Fine-Tuning for institutional protocols and medical knowledge.

- Stage 2: RAG integration for real-time patient data and ongoing research updates.

- Stage 3: Feedback loops for continuous learning based on clinical outcomes.

This layered design brings organizations closer to clinically reliable, adaptive AI systems.

Cost-Benefit Analysis for Healthcare Organizations

Both strategies involve significant financial considerations:

- Fine-Tuning requires GPU clusters (with costs in the tens of thousands per training run), expert engineering, and weeks of development.

- RAG AI shifts costs toward knowledge base curation, retrieval infrastructure, and ongoing data quality assurance.

Yet both approaches unlock major efficiency gains. A physician typically spends 7 minutes analyzing a report; across 12,651 reports, that adds up to nearly 1,476 hours. AI reduces this to seconds per case, a substantial return on investment for large-scale systems.
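The arithmetic behind that estimate is easy to reproduce. In the sketch below, the 7 minutes and 12,651 reports come from the text; the 10 seconds per case for AI-assisted review is an assumed figure chosen only to illustrate "seconds per case".

```python
MINUTES_PER_REPORT = 7          # from the text
REPORT_COUNT = 12_651           # from the text
ASSUMED_AI_SECONDS = 10         # assumption for illustration, not a measured value

def total_hours(report_count, seconds_per_report):
    """Total review time in hours for a batch of reports."""
    return report_count * seconds_per_report / 3600

manual_hours = total_hours(REPORT_COUNT, MINUTES_PER_REPORT * 60)
ai_hours = total_hours(REPORT_COUNT, ASSUMED_AI_SECONDS)

print(f"Manual review: {manual_hours:.2f} hours")
print(f"AI-assisted (assumed {ASSUMED_AI_SECONDS}s per case): {ai_hours:.2f} hours")
```

Manual review works out to 88,557 minutes, or about 1,476 hours. The AI-assisted figure scales linearly with whatever per-case time a given system actually achieves.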

Privacy and Regulatory Considerations

Healthcare AI implementation must address stringent privacy requirements under HIPAA and similar regulations.

Data Security Advantages

RAG systems maintain data separation, keeping sensitive patient information in secure databases rather than embedding it in model parameters. This approach facilitates compliance with privacy regulations and enables granular access control.
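One way to picture this separation: patient records stay in a store with access rules attached, and a permission filter runs before anything reaches the model prompt. The sketch below is hypothetical throughout; the `Record` type, the role names, and the record contents are invented for illustration and do not describe any specific product or a complete HIPAA control.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Record:
    patient_id: str
    text: str
    allowed_roles: frozenset   # roles permitted to see this record

# Invented example records; a real system would query a secured database.
RECORDS = [
    Record("p-001", "Discharge summary placeholder.", frozenset({"physician", "nurse"})),
    Record("p-001", "Billing note placeholder.", frozenset({"billing"})),
    Record("p-002", "Lab result placeholder.", frozenset({"physician"})),
]

def visible_records(records, role):
    """Keep only the records the caller's role is allowed to read."""
    return [r for r in records if role in r.allowed_roles]

def build_context(records, role):
    """Assemble prompt context from permitted records only; nothing else reaches the model."""
    return "\n".join(r.text for r in visible_records(records, role))

print(build_context(RECORDS, "nurse"))
```

Because the filter sits in the retrieval layer, revoking a role's access takes effect immediately, with no retraining involved.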

Fine-Tuning raises concerns about data exposure, as proprietary medical information becomes embedded in model weights. However, recent advances in federated learning and differential privacy techniques are addressing these challenges.

Future Directions and Recommendations

The trajectory of AI in healthcare suggests increasing sophistication:

- Technical innovations: Multimodal RAG integrating text, images, and video, and lightweight Fine-Tuning approaches like LoRA and QLoRA that lower computational costs.

- Clinical pathways:

  - RAG AI for dynamic retrieval (e.g., patient education, real-time literature queries).

  - Fine-Tuning for stable, domain-specific diagnostics and workflow automation.

  - Hybrid models for end-to-end clinical decision support.
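To see why LoRA lowers the cost of Fine-Tuning, consider a single weight matrix. LoRA freezes the original matrix and trains two small low-rank factors in its place, so the trainable parameter count drops from `d_out * d_in` to `rank * (d_out + d_in)`. The layer size and rank below are assumed values chosen for illustration; QLoRA goes further by storing the frozen base weights in quantized form.

```python
def full_params(d_out, d_in):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in

def lora_trainable_params(d_out, d_in, rank):
    """Trainable parameters for a LoRA update: factors of shape (d_out, rank) and (rank, d_in)."""
    return rank * (d_out + d_in)

# Assumed example: one 4096 x 4096 projection matrix with LoRA rank 8.
d_out, d_in, rank = 4096, 4096, 8
full = full_params(d_out, d_in)
lora = lora_trainable_params(d_out, d_in, rank)

print(f"Full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"Reduction factor: {full // lora}x")
```

With these assumed numbers the trainable parameter count for the layer falls by a factor of 256. Savings in a real model depend on which layers receive adapters and on the rank chosen.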

Ultimately, neither Fine-Tuning nor RAG AI alone offers a universal solution. Retrieval Augmented Generation delivers real-time adaptability, while Fine-Tuning ensures deep expertise in defined clinical tasks.

The future lies in hybrid ecosystems that combine the best of both—empowering clinicians with faster, safer, and more accurate patient outcome predictions.


Makebot Leads with Hybrid AI

Healthcare AI proves that neither RAG nor Fine-Tuning alone can solve every challenge—hybrid systems deliver the best of both worlds. That’s why Makebot’s HybridRAG Framework, showcased at SIGIR 2025, is designed to combine deep medical expertise with real-time adaptability. From hospitals to research institutions, we provide customized, domain-specific AI and chatbot solutions that ensure accuracy, compliance, and scalability.

This is where Makebot bridges the gap. We go beyond technology delivery, providing industry-specific LLM agents and end-to-end AI solutions tailored to your business strategy and goals.

Why Choose Makebot?

- Industry-Specific LLM Agents: From healthcare agents used by leading hospitals like Seoul National University Hospital and Gangnam Severance Hospital, to solutions for finance, retail, and the public sector, Makebot delivers customized AI you can trust.

- Ready-to-Deploy AI Solutions: Upgrade or replace your chatbot with BotGrade, enhance customer service with MagicTalk, process complex data with MagicSearch, or automate 24/7 voice consultations through MagicVoice.

- Rapid PoC to Deployment: Quickly transform ideas into proof of concept and scale to production—maximizing adoption speed and ROI.

- Global-Verified Technology: With HybridRAG, presented at SIGIR 2025, Makebot achieved a 26.6% accuracy improvement and up to 90% cost reduction, setting new global benchmarks. Backed by multiple LLM/RAG patents and trusted by over 1,000 enterprises, we deliver stability and proven impact.

Generative AI is no longer just an innovation—it’s a core growth engine. With Makebot, you can move strategically from exploration to execution and turn AI potential into measurable business results.

👉 Start your AI journey today: www.makebot.ai

📩 Contact us at b2b@makebot.ai to discuss how we can help you lead the AI shift.
