Why Most Enterprise Chatbot Projects Fail Before They Begin

Most enterprise chatbots fail early—Makebot’s HybridRAG™ turns pilots into scalable success.

The promise of Enterprise Chatbots has never been greater, yet the results remain sobering. Industry data shows that 70–85% of AI initiatives fail to achieve their intended outcomes, and nearly half of all proofs of concept never reach production. For chatbots specifically, over 60% stall during pilot phases, never achieving scale or ROI.

This isn’t a technology failure. It’s a failure of strategy, data, integration, and governance—issues that emerge long before the first model is trained or the first LLM prompt is written.

“AI projects don’t collapse at deployment—they collapse at definition.”

This article dissects the foundational causes behind these failures and offers a practical lens for executives seeking to transform AI Chatbot Development from an experiment into an enterprise-wide capability.

1. The Strategic Misalignment

Building Solutions Without Defining Problems

According to a recent MIT study, 95% of generative AI projects fail to deliver significant value, and S&P Global reports that 42% of companies abandoned most of their AI initiatives in 2025, more than double the previous year's figure. The reason isn’t algorithmic; it’s strategic. Organizations build chatbots to “stay competitive,” not to solve defined, high-value pain points. The success stories start from the problem instead: Lumen Technologies first identified a $50-million productivity loss from sales teams spending hours on research, and only then designed an AI assistant to address it.

By contrast, many firms deploy In-House AI systems without metrics, targets, or cost-benefit models—producing technically impressive tools with no business relevance.

“The wrong problem solved perfectly is still a failure.”

Architecture Implication:

Before coding, leaders must link chatbot purposes to quantifiable business outcomes—process inefficiencies, opportunity costs, and measurable ROI. Without this foundation, even the best LLM-powered solution is just another proof-of-concept.

2. The Data Foundation Crisis

Why RAG Systems Fail Before Launch

Forbes reports that 85% of failed AI projects cite data quality or availability as a core issue, and Informatica's 2025 CDO Insights survey identifies data quality and readiness (43%) and lack of technical maturity (43%) as the top obstacles to AI success. For RAG (Retrieval-Augmented Generation) architectures, poor data hygiene guarantees failure.

High-performing RAG systems require:

- Structural consistency – standardized formats and taxonomies

- Semantic richness – context-aware metadata

- Temporal relevance – up-to-date, policy-aligned data

In one healthcare deployment, improving data structure alone raised chatbot accuracy from 60% to 90%. Yet most enterprises underestimate data readiness by a factor of three, discovering governance gaps only after deadlines are missed.

“Bad data doesn’t just slow AI—it blinds it.”

Architecture Implication:

Dedicate 50–70% of project time to data preparation. Conduct audits, implement vector databases for semantic search, enforce metadata standards, and establish continuous data-quality monitoring. AI Development succeeds only on data discipline.
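The three requirements listed in this section (structural consistency, semantic richness, temporal relevance) lend themselves to an automated audit. The following is a minimal sketch in Python, not a description of any particular product. The `Document` shape, the required metadata fields, and the one-year freshness window are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical metadata standard and freshness window; adjust to your own policy.
REQUIRED_FIELDS = ("title", "department", "doc_type")
MAX_AGE = timedelta(days=365)


@dataclass
class Document:
    doc_id: str
    text: str
    last_reviewed: date
    metadata: dict = field(default_factory=dict)


def audit_document(doc, today):
    """Return a list of readiness problems; an empty list means the document passes."""
    problems = []
    # Structural consistency and semantic richness: required metadata must be present.
    for name in REQUIRED_FIELDS:
        if not doc.metadata.get(name):
            problems.append(f"missing metadata: {name}")
    # Temporal relevance: flag content that has not been reviewed recently.
    if today - doc.last_reviewed > MAX_AGE:
        problems.append("stale: last review older than freshness window")
    if not doc.text.strip():
        problems.append("empty body")
    return problems


def readiness_rate(docs, today):
    """Share of documents that pass every check (0.0 for an empty corpus)."""
    if not docs:
        return 0.0
    passing = sum(1 for d in docs if not audit_document(d, today))
    return passing / len(docs)
```

Running `readiness_rate` on a schedule and tracking it over time is one simple way to approximate the continuous data-quality monitoring recommended above.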

3. The Integration Nightmare

When Legacy Systems Meet Agentic AI

Few prototypes survive contact with real infrastructure. Modern Enterprise AI chatbots must interact seamlessly with CRMs, ERPs, and authentication layers—each introducing new failure points like token management, version mismatches, and rate limits.

“Integration, not intelligence, determines scalability.”

Agentic AI compounds this challenge. Unlike reactive bots, agentic systems observe, plan, and act autonomously—requiring persistent memory, orchestration layers, and real-time decision logic. Gartner predicts over 40% of agentic AI projects will be canceled by 2027 due to architectural immaturity.

Architecture Implication:

Adopt an agentic AI mesh—a composable, vendor-agnostic framework supporting reasoning and collaboration across systems. Plan for middleware orchestration, cross-API governance, and human-in-the-loop escalation before deployment.
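Rate limits and human-in-the-loop escalation, both mentioned in this section, can be combined in one small middleware pattern: retry a throttled backend call with exponential backoff, and hand off to a person when retries run out. The sketch below assumes a hypothetical `RateLimited` exception raised by whatever CRM or ERP client is in use; the function name, defaults, and return shape are illustrative only.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical error a backend client raises when it is throttled."""


def call_with_backoff(call, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Run `call`, retrying on RateLimited with exponential backoff plus jitter.

    Returns a dict with a status of "ok" or "escalate_to_human".
    """
    for attempt in range(max_attempts):
        try:
            return {"status": "ok", "result": call()}
        except RateLimited:
            if attempt == max_attempts - 1:
                break  # retries exhausted, fall through to escalation
            # Delay doubles each attempt; jitter avoids synchronized retries.
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
    return {"status": "escalate_to_human", "result": None}
```

Passing `sleep` as a parameter keeps the function testable without real waiting. Other failure types, such as expired tokens or version mismatches, would need their own handling and are left out here.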

4. The Governance Vacuum

When Chatbots Become Legal Liabilities

From Air Canada’s bereavement-fare lawsuit to NYC’s MyCity chatbot giving businesses advice that contradicted the law, governance failures have become corporate liabilities. These incidents reveal a governance gap: organizations deploy LLM-powered systems capable of generating authoritative-sounding responses without establishing verification mechanisms, accuracy thresholds, or escalation protocols for high-stakes decisions. According to enterprise AI research, only 29% of companies report responsible AI programs that go beyond shallow or poorly integrated efforts.

“A confident answer isn’t the same as a correct one.”

Generative AI models are probabilistic—they hallucinate. Even RAG-based systems can misinterpret retrieved context or fabricate citations. Without safeguards, enterprises risk regulatory breaches and reputational damage.

Architecture Implication:

Implement multi-layered governance—content verification, confidence thresholds triggering human review, bias audits, and full audit logging. Define ownership of AI-generated outputs and remediation procedures for inevitable errors.
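A confidence threshold with audit logging can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a complete governance layer: the threshold value, the list of high-stakes topics, and the idea that a usable `confidence` score is available (for example from a retrieval score or a separate verifier) are all hypothetical.

```python
import json
from datetime import datetime, timezone

# Hypothetical policy values; real thresholds should come from measured accuracy.
CONFIDENCE_THRESHOLD = 0.75
HIGH_STAKES_TOPICS = {"refunds", "legal", "medical"}


def route_answer(question, draft_answer, confidence, topic, audit_log):
    """Decide whether a draft answer is sent automatically or goes to a human.

    Every decision is appended to `audit_log` as a JSON line.
    """
    if topic in HIGH_STAKES_TOPICS:
        decision, reason = "human_review", "high-stakes topic"
    elif confidence < CONFIDENCE_THRESHOLD:
        decision, reason = "human_review", "confidence below threshold"
    else:
        decision, reason = "auto_reply", "passed checks"

    audit_log.append(json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "question": question,
        "draft_answer": draft_answer,
        "confidence": confidence,
        "topic": topic,
        "decision": decision,
        "reason": reason,
    }))
    return decision
```

Routing high-stakes topics to review regardless of score reflects the point made above: a confident answer is not necessarily a correct one.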

5. The Organizational Change Failure

Technology Without Transformation

Most chatbot failures are cultural, not technical. Research from 2024 shows that lack of reskilling and unmanaged change cause 70% of AI rollouts to stall. Employees resist tools they don’t understand; managers ignore workflows they didn’t design.

“You can’t automate a culture that refuses to change.”

Telstra succeeded by positioning its chatbot as augmentation, not replacement—boosting agent productivity by 20%. Contrast that with firms where AI announcements sparked layoffs and fear, ensuring minimal adoption.

Architecture Implication:

Allocate 20–30% of project resources to change management—training, communication, KPI redesign, and leadership advocacy. AI adoption scales only when humans see themselves in the transformation.

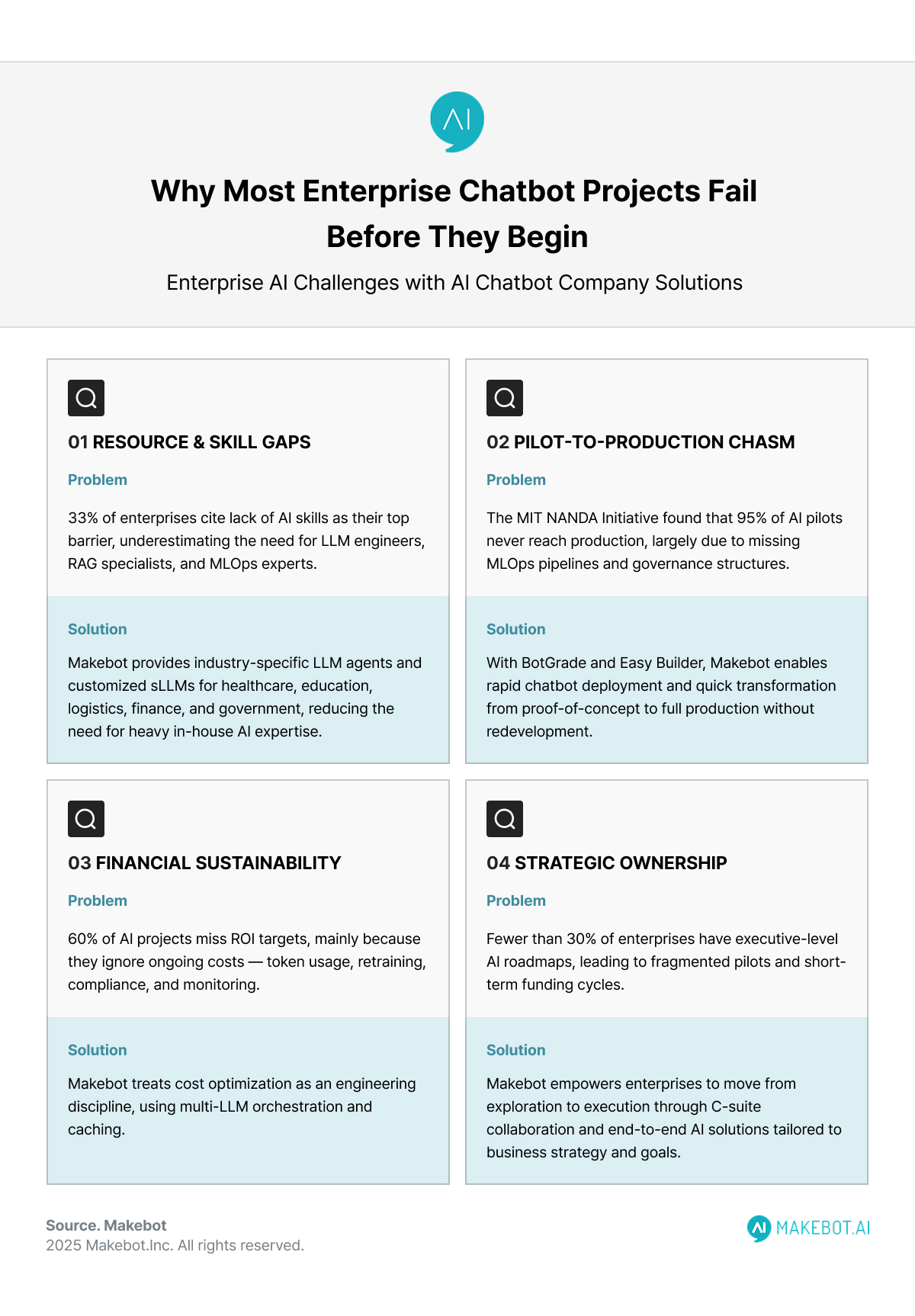

6. The Resource and Skill Gap

Why In-House AI Teams Struggle

According to one study, 33% of enterprises cite insufficient AI expertise as their biggest deployment challenge. Building an Enterprise Chatbot requires more than programmers: it demands a multidisciplinary team of LLM engineers, RAG specialists, DevOps, data scientists, security experts, and business leaders who understand process logic. Yet most organizations assume existing IT teams can simply “learn AI,” underestimating the complexity of Generative AI development. The result is stalled projects and fragmented architectures.

“AI doesn’t fail for lack of code—it fails for lack of capability.”

Beyond talent scarcity, costs scale faster than anticipated. LLM API usage, model retraining, vector database maintenance, and compliance monitoring often push annual operating expenses beyond the original build budget. Traditional IT systems typically need 10–20% of their build cost in annual upkeep; AI Development can require recurring costs of 50–100% of the build cost each year, especially for high-volume enterprise chatbots.

Architecture Implication:

Enterprises should adopt a hybrid model—partner externally for technical depth while developing internal AI translators who bridge strategy and engineering. This approach balances innovation, cost control, and long-term scalability, transforming AI from an experiment into a sustainable enterprise capability.

7. The Pilot-to-Production Chasm

Why POCs Don’t Scale

Enterprises are collectively spending $30–40 billion on Generative AI pilots that fail to deliver measurable business impact. The MIT NANDA Initiative found that 95% of projects never reach production, with only 4% creating significant enterprise value. BCG’s global survey confirms that just 26% of companies possess the capabilities to move beyond proof-of-concept, while IDC reports that for every 33 pilots launched, only 4 scale successfully.

“Pilot purgatory is where innovation dies and budgets go to burn.”

This failure stems not from weak LLMs but from weak execution. Organizations confuse prototypes, POCs, MVPs, and pilots—each requiring distinct readiness levels, governance, and metrics. As data gaps, integration hurdles, and cost overruns mount, executives lose patience and funding evaporates.

Architecture Implication:

Scalable AI Development demands industrialized practices: MLOps pipelines, CI/CD automation, version control, and performance monitoring that measures both technical and business KPIs. Enterprises must define SLAs, governance structures, and rollback procedures from day one. Treat AI Scalability as a design principle, not a last-minute concern.
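One way to make "SLAs and rollback procedures from day one" concrete is a release gate that checks technical and business KPIs together. The sketch below is a simplified illustration; every metric name and threshold in `SLA` is a hypothetical placeholder rather than a recommended value.

```python
# Hypothetical SLA: each entry is (kind, limit), where kind is "max" or "min".
SLA = {
    "p95_latency_ms": ("max", 2000),         # technical KPI
    "answer_accuracy": ("min", 0.85),        # technical KPI
    "containment_rate": ("min", 0.40),       # business KPI: resolved without a human
    "cost_per_conversation": ("max", 0.25),  # business KPI
}


def check_release(metrics, sla=SLA):
    """Return ("promote", []) if every SLA holds, else ("rollback", breaches)."""
    breaches = []
    for name, (kind, limit) in sla.items():
        value = metrics.get(name)
        if value is None:
            # An unreported metric is treated as a breach, not a pass.
            breaches.append(f"{name}: not reported")
        elif kind == "max" and value > limit:
            breaches.append(f"{name}: {value} exceeds {limit}")
        elif kind == "min" and value < limit:
            breaches.append(f"{name}: {value} below {limit}")
    return ("rollback" if breaches else "promote", breaches)
```

Treating a missing metric as a breach is a deliberate choice here: a pilot that cannot report its business KPIs is not ready to be promoted.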

Companies that adopt this disciplined approach join the successful 5% turning AI pilots into profitable operations—while the rest remain trapped in an endless cycle of proof without progress.

8. The Financial Reality

When ROI Models Ignore Hidden Costs

Most Enterprise AI budgets collapse not because of overspending, but because of under-planning. Initial estimates focus on AI Development costs (engineering time, LLM licensing, and cloud infrastructure) while ignoring the recurring expenses that determine long-term viability. According to Deloitte’s Financial AI Adoption Report (2024), only 38% of AI projects in finance meet or exceed ROI expectations, and over 60% of firms report significant implementation delays.

These recurring expenses include data-security systems, compliance audits for GDPR and industry regulations, and content-management teams updating knowledge bases as policies evolve. Even successful Enterprise Chatbots demand human-in-the-loop verification for sensitive outputs, continuous retraining, and 24/7 monitoring for bias or drift.

“AI doesn’t just cost to build—it costs to keep honest.”

The shift to consumption-based pricing amplifies risk. Every token processed by an LLM adds cost, and a single customer query may trigger multiple API calls for classification, retrieval, and generation. At scale, this compounds into millions in unplanned expenses.
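The compounding effect is easy to see with a back-of-the-envelope estimate. The Python sketch below multiplies query volume by calls per query and tokens per call. All four input values are invented for illustration and do not reflect any vendor's actual pricing.

```python
def monthly_llm_cost(queries_per_month, calls_per_query, tokens_per_call, price_per_1k_tokens):
    """Estimate monthly spend under simple per-token pricing."""
    total_tokens = queries_per_month * calls_per_query * tokens_per_call
    return total_tokens / 1000 * price_per_1k_tokens


# Hypothetical workload: one customer query fans out into three API calls.
estimate = monthly_llm_cost(
    queries_per_month=1_000_000,
    calls_per_query=3,          # classification, retrieval, generation
    tokens_per_call=1_500,
    price_per_1k_tokens=0.002,  # assumed price, not a real quote
)
print(f"Estimated monthly spend: {estimate:,.2f}")  # prints 9,000.00
```

Because the estimate is a straight product, doubling any one factor (traffic, fan-out, prompt length, or price) doubles the bill, which is why small design decisions per query matter at scale.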

Architecture Implication:

Financial sustainability must be designed, not discovered. Use caching to minimize redundant calls, pair lightweight models for simple queries with high-capacity LLMs for complex reasoning, and continuously monitor token consumption. Cost optimization is now a core engineering discipline, not an after-the-fact finance adjustment.
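The three tactics named above (caching, model tiering, and token monitoring) fit in one small component. The sketch below is a simplified Python illustration: the `CostAwareRouter` class is hypothetical, models are assumed to be plain callables returning `(text, tokens_used)`, and the word-count rule is a crude stand-in for a real complexity classifier.

```python
from collections import Counter


class CostAwareRouter:
    """Cache answers, send short queries to a small model, and count tokens."""

    def __init__(self, small_model, large_model, max_simple_words=12):
        # Both models are assumed to be callables: query -> (text, tokens_used).
        self.small_model = small_model
        self.large_model = large_model
        self.max_simple_words = max_simple_words
        self.cache = {}
        self.stats = Counter()

    def answer(self, query):
        # Normalize case and whitespace so trivial variants share a cache entry.
        key = " ".join(query.lower().split())
        if key in self.cache:
            self.stats["cache_hits"] += 1
            return self.cache[key]

        # Placeholder heuristic: short queries are treated as simple.
        tier = "small" if len(key.split()) <= self.max_simple_words else "large"
        model = self.small_model if tier == "small" else self.large_model
        text, tokens = model(query)

        self.stats[f"{tier}_tokens"] += tokens
        self.cache[key] = text
        return text
```

The `stats` counter gives a running view of token consumption per tier and of cache hits, which is the raw material for the continuous monitoring described above. A production cache would also need expiry so that answers do not outlive the policies they describe.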

9. The Strategic Imperative

Moving Beyond Pilot Purgatory

Breaking free from “pilot purgatory” demands what most enterprises lack: CEO-level ownership of AI strategy. Fewer than 30% of organizations have executive-sponsored AI roadmaps, resulting in disconnected pilots, short-term funding, and no enterprise coordination. Only top-down commitment can turn experimentation into transformation. The CEO must declare that Enterprise AI is a business priority, not an IT project, and drive unified investment, governance, and cross-functional accountability.

“AI transformation doesn’t start in the lab—it starts in the boardroom.”

The shift now required is from isolated use cases to redesigned business processes. Leaders should not ask “Where can we use AI?” but “How would this function operate if agentic AI handled 60% of it?” That vision reframes work around human judgment and creative oversight while automating repetitive cognition.

Architecture Implication:

Strategic success requires comprehensive AI readiness assessments, integrated roadmaps aligning business priorities with data and infrastructure maturity, and cross-functional AI councils linking business, IT, and governance. By measuring value through enterprise KPIs—not technical metrics—organizations evolve from pilots to platforms.

In short, sustainable advantage belongs to companies treating AI as an organizational operating system, not as another technology experiment.

How Makebot’s Approach Solves These Challenges

Makebot bridges the gap between AI experimentation and enterprise-scale deployment with proven, research-backed, and industry-tested frameworks—combining patented technology, verified performance, and end-to-end integration.

Strategic & Business Alignment

Makebot conducts AI Readiness and Business Process Audits that directly align chatbot goals with measurable KPIs, ensuring every deployment contributes to productivity, customer engagement, or operational efficiency.

Data Readiness & Reliability

Powered by the patented HybridRAG™ framework (Patent No. 2024-0933135), Makebot automates data normalization and semantic enrichment, achieving a 26.6% improvement in F1 score and up to 90% cost reduction, as validated at SIGIR 2025 in Padua, Italy.

Seamless Integration & Scalability

Through platforms like BotGrade, Easy Builder, and LLM Builder, Makebot enables rapid, low-code integration of chatbots across CRMs, ERPs, and internal databases, supporting multiple models (GPT-5, Claude, Gemini, HyperCLOVA X, and Makebot sLLMs) under one ecosystem.

Responsible AI Governance

Makebot ensures technical stability and compliance through verified enterprise-grade systems trusted by over 1,000 Korean companies, embedding audit logging, bias checking, and confidence-based human review within each AI workflow.

Human Adoption & Change Management

Deployed in top institutions such as Seoul National University Hospital and Gangnam Severance Hospital, Makebot promotes AI as augmentation, not automation—enhancing productivity, collaboration, and user trust.

Ready-to-Deploy AI Solutions

Makebot’s portfolio includes:

- BotGrade: Upgrade existing chatbots with LLM capability.

- MagicTalk: Deliver multilingual, context-aware conversational AI.

- MagicSearch: Provide deep semantic document retrieval.

- MagicVoice: Automate 24/7 voice consultations and call centers.

- LLM Builder: Fine-tune or deploy multiple LLMs for domain-specific use.

Skill & Resource Augmentation

Through industry-specific LLM agents and specialized small LLMs (sLLM 8B/70B) for healthcare, finance, education, logistics, and government, Makebot reduces the need for large internal AI teams while maintaining domain precision.

Rapid PoC to Deployment

Makebot’s Rapid Deployment Frameworks transform proofs of concept into full-scale production in weeks, with built-in MLOps automation, performance dashboards, and continuous optimization—eliminating the pilot-to-production gap.

Financial Sustainability

By shifting computation offline through HybridRAG’s pre-generated QA pipeline, Makebot demonstrated cost efficiency—130,000 QA pairs generated across seven domains for just $24.50—proving sustainable scalability even in high-volume environments.

Executive-Level AI Strategy

Makebot partners with executives to design C-suite-driven AI strategies, unifying business priorities, governance, and data maturity under a single enterprise roadmap—transforming fragmented pilots into enterprise-wide AI ecosystems.

Conclusion

Enterprise AI projects fail not because the technology is immature—but because organizations start unprepared. They skip the groundwork: defining measurable goals, cleansing data, planning integration, enforcing governance, and preparing their people.

The path forward demands discipline:

- Treat AI as business transformation, not IT innovation.

- Anchor every chatbot in real metrics.

- Build data foundations before development.

- Design for governance, scalability, and adoption from the start.

Generative AI, powered by advanced LLMs, can transform customer experience and operational efficiency—but only for those who respect the foundations. The rest will continue to fund the failure statistics, one abandoned pilot at a time.

Transform AI Failure into Scalable Success with Makebot

Most Enterprise Chatbot projects fail long before launch—buried under unclear goals, poor data foundations, and fragmented architectures. Makebot was built to solve exactly these problems. By combining domain-specific LLM expertise with production-ready frameworks like HybridRAG, Makebot bridges the gap between AI Development and real-world business outcomes.

Whether you’re in healthcare, finance, retail, or the public sector, Makebot delivers industry-verified AI solutions that move beyond experimentation—ensuring your chatbot strategy translates into measurable ROI, faster deployment, and full AI Governance compliance.

With over 1,000 enterprise clients and a 26.6% accuracy improvement showcased at SIGIR 2025, Makebot’s proven platforms—BotGrade, MagicTalk, MagicSearch, and MagicVoice—empower you to transition from pilot to production seamlessly. Backed by patented RAG (Retrieval-Augmented Generation) architectures and end-to-end deployment support, we help organizations eliminate “pilot purgatory” and achieve scalable, secure, and cost-efficient AI Implementation. The next wave of Enterprise AI success begins not with another pilot, but with a trusted partner who builds for scale from day one.

👉 Start transforming your chatbot strategy today at www.makebot.ai or email b2b@makebot.ai to connect with our enterprise AI specialists.
