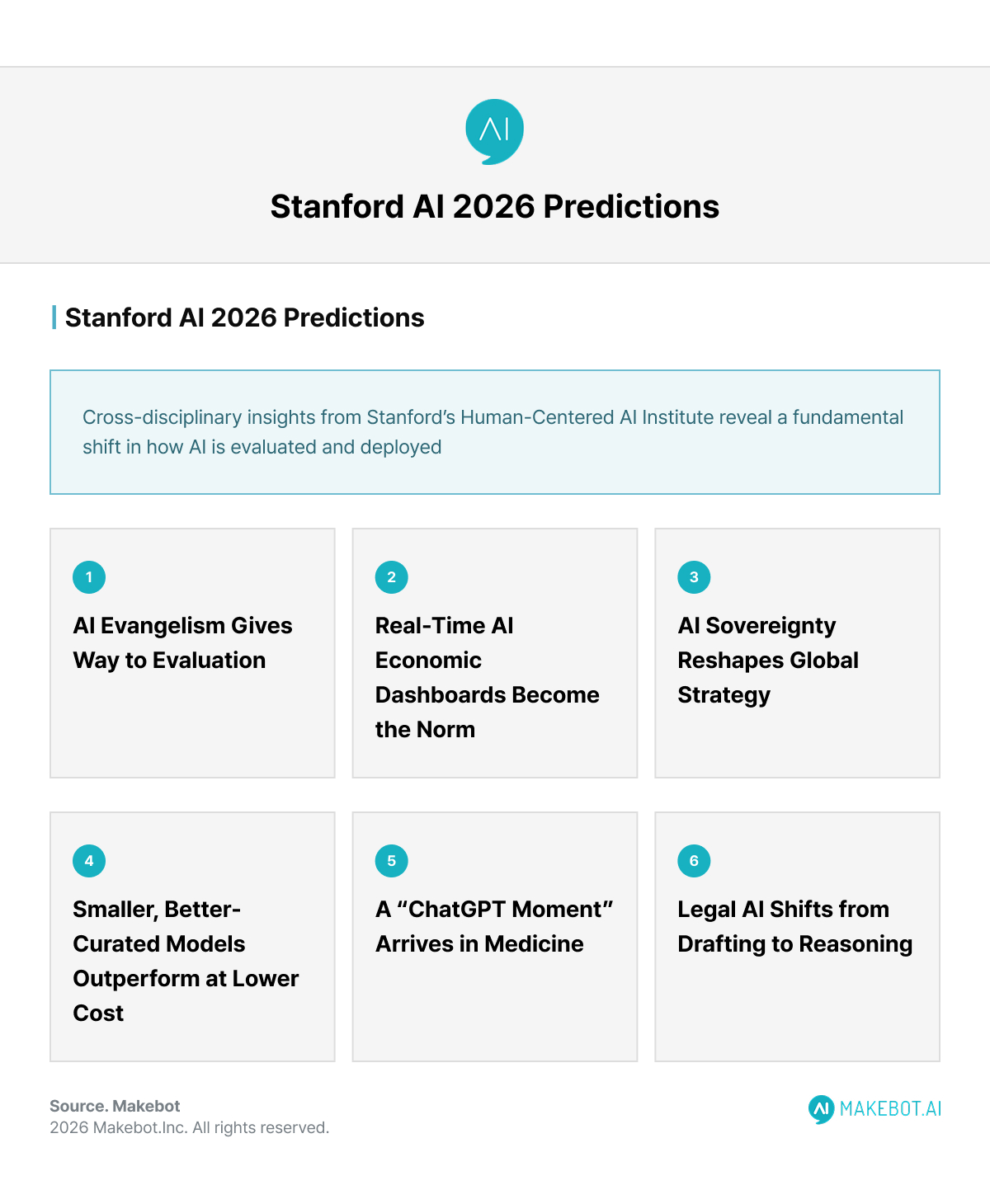

Stanford AI Experts’ Predictions for 2026

AI enters a proof-driven phase where ROI, evaluation, and real workflows matter more than hype.

In 2026, artificial intelligence moves out of a phase driven by expectations and possibilities and into one centered on verifiable performance and real value creation. According to Stanford AI researchers, the industry is shifting from emphasizing technical potential to being evaluated on actual results.

Models, systems, and investment decisions will no longer be judged by demos or promises, but by measurable outcomes. Across healthcare, law, economics, and computer science, Stanford AI researchers predict the following shifts:

- Stricter verification of productivity improvements

- Expansion of near real-time analysis of AI’s economic impact

- Increased executive focus on AI ROI (return on investment)

- Growing importance of alignment between Generative AI capabilities and real operational workflows

This 2026 outlook indicates that AI is entering a phase of maturity. Benchmarks will matter more than bold claims, and real performance will take priority over abstract visions. The line between areas where AI creates tangible value and those where it does not is expected to become increasingly clear.

Glossary of Key Terms

Generative AI. AI systems capable of producing new content—such as text, summaries, or recommendations—based on learned patterns. In enterprise settings, its value depends on measurable performance within real workflows.

Large Language Model (LLM). A class of Generative AI models trained to understand and generate natural language. LLM effectiveness varies widely by task, cost, and deployment context.

AI ROI. A measurement framework evaluating whether AI systems deliver tangible productivity gains, cost savings, or outcome improvements relative to their operational and infrastructure costs.

Evaluation Frameworks. Structured methods—benchmarks, dashboards, and task-level metrics—used to assess real-world AI performance beyond lab accuracy or demo success.

Human-in-the-Loop AI. AI systems designed to support human judgment rather than replace it, maintaining accountability and oversight in high-stakes or regulated environments.

Why Stanford’s Voice Matters for 2026

The Stanford Institute for Human-Centered Artificial Intelligence (HAI) occupies a distinctive position in the AI ecosystem. Unlike vendor-driven forecasts or consultancy outlooks, its perspectives are grounded in cross-disciplinary research spanning computer science, medicine, law, economics, and public policy. That breadth matters in 2026, a year many Stanford researchers describe as a “reality check” for artificial intelligence.

After years of rapid investment and speculative infrastructure buildouts, Stanford AI experts argue that the central question has changed. It is no longer “Can AI do this?” but “How well does it perform, at what cost, and for whom?” This framing reflects a shift from possibility to proof, with implications for enterprises, governments, and workers alike.

Key Predictions from Stanford AI Experts for 2026

1. AI Evangelism Gives Way to Evaluation

Prediction

In 2026, AI adoption will be governed by benchmarks, dashboards, and outcome-based evaluation rather than hype-driven pilots.

Technical rationale

Stanford researchers emphasize that most large-scale deployments since 2023 lacked standardized evaluation frameworks. New approaches—such as task-level productivity tracking, domain-specific benchmarks, and continuous monitoring—are emerging to assess real-world performance rather than lab accuracy alone.
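As a rough illustration of task-level productivity tracking, the sketch below sums time saved per task type and converts it into a simple ROI ratio, (gains - cost) / cost. The `TaskLog` fields, the function names, and all numbers are assumptions made up for this example; they are not taken from any Stanford framework.

```python
from dataclasses import dataclass


@dataclass
class TaskLog:
    """One completed task. Field names are hypothetical."""
    task_type: str
    minutes_baseline: float  # typical time without AI assistance
    minutes_with_ai: float   # observed time with AI assistance


def minutes_saved(logs: list[TaskLog]) -> dict[str, float]:
    """Total minutes saved per task type (negative means AI slowed work)."""
    saved: dict[str, float] = {}
    for log in logs:
        delta = log.minutes_baseline - log.minutes_with_ai
        saved[log.task_type] = saved.get(log.task_type, 0.0) + delta
    return saved


def ai_roi(value_of_gains: float, total_cost: float) -> float:
    """Simple ROI ratio: (gains - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (value_of_gains - total_cost) / total_cost


# Invented sample data, for illustration only.
logs = [
    TaskLog("code_review", 30.0, 20.0),
    TaskLog("code_review", 40.0, 25.0),
    TaskLog("contract_summary", 20.0, 25.0),  # AI made this task slower
]
saved = minutes_saved(logs)
value = sum(saved.values()) * 1.5  # assumed value of 1.5 per minute saved
print(saved, ai_roi(value, total_cost=20.0))
```

Keeping the per-task breakdown separate from the single ROI figure makes it visible when an overall gain hides task types where the tool slows people down.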

Supporting data

James Landay, co-director of Stanford HAI, predicts that companies will increasingly admit AI has not delivered broad productivity gains, except in targeted domains like programming and call centers. Many failed projects will surface publicly in 2026.

Business implication

Enterprises will face pressure to justify AI spend with concrete metrics tied to AI ROI, accelerating the retirement of underperforming systems.

Timeline

- H1 2026: Widespread post-mortems on earlier AI pilots

- End of 2026: Evaluation frameworks become standard procurement requirements

2. Real-Time AI Economic Dashboards Become the Norm

Prediction

AI’s economic impact will be tracked in near real time, similar to financial or operational dashboards.

Technical rationale

Drawing on payroll, platform usage, and task-level data, these dashboards measure where AI boosts productivity, displaces labor, or creates new roles. The approach mirrors national accounting systems but operates at much higher frequency.
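A minimal sketch of the aggregation such a dashboard might run each month is shown here: headcount is summed per AI-exposure group, then compared month over month. The record layout, the group labels, and every number are hypothetical; they do not reproduce the Digital Economy Lab's data or methodology.

```python
from collections import defaultdict


def monthly_headcount(records):
    """Sum headcount per (exposure_group, month).

    Each record is a (month, exposure_group, headcount) tuple.
    """
    totals = defaultdict(int)
    for month, group, headcount in records:
        totals[(group, month)] += headcount
    return dict(totals)


def month_over_month_change(totals, group, prev_month, month):
    """Percent change in headcount for one group between two months."""
    before = totals[(group, prev_month)]
    after = totals[(group, month)]
    if before == 0:
        raise ValueError("no baseline headcount for " + group)
    return 100.0 * (after - before) / before


# Invented payroll-style records, for illustration only.
records = [
    ("2026-01", "high_exposure", 400),
    ("2026-01", "high_exposure", 100),
    ("2026-02", "high_exposure", 480),
    ("2026-01", "low_exposure", 500),
    ("2026-02", "low_exposure", 510),
]
totals = monthly_headcount(records)
for group in ("high_exposure", "low_exposure"):
    print(group, month_over_month_change(totals, group, "2026-01", "2026-02"))
```

Comparing exposed and less-exposed groups side by side is the point of the exercise: a change in one group alone says little unless the other group is tracked over the same period.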

Supporting data

Erik Brynjolfsson and the Stanford Digital Economy Lab describe “AI economic dashboards” that update monthly instead of arriving years after the fact. Early work with ADP already shows weaker employment outcomes for early-career workers in AI-exposed roles.

Business implication

Executives will monitor AI exposure metrics alongside revenue and cost dashboards, tightening the feedback loop between AI capability and organizational outcomes.

Timeline

- Mid-2026: Early enterprise adoption

- End of 2026: Dashboards influence workforce and investment decisions

3. AI Sovereignty Reshapes Global Strategy

Prediction

Nations will accelerate efforts to control AI infrastructure, data, and deployment models.

Technical rationale

AI sovereignty can take multiple forms: building domestic large language models, running foreign models on local GPUs, or enforcing data localization. Each approach balances control, cost, and technical complexity.

Supporting data

Stanford HAI notes sustained investment in national AI data centers in regions such as the UAE and South Korea, signaling long-term geopolitical competition around compute and data.

Business implication

Multinational firms must adapt AI architectures to comply with regional sovereignty requirements, affecting deployment speed and total cost of ownership.

Timeline

- 2026: Sovereignty becomes a strategic constraint in global AI rollouts

4. Smaller, Better-Curated Models Outperform at Lower Cost

Prediction

The era of “bigger is always better” gives way to optimized, domain-specific models.

Technical rationale

Stanford researchers highlight “peak data” and diminishing returns from scaling. Advances in architecture, training efficiency, and dataset curation allow smaller models to match or exceed large models on specific tasks.

Supporting data

Landay notes increasing effort toward curating high-quality, smaller datasets as data scarcity and quality issues constrain brute-force scaling.

Business implication

Organizations can improve AI ROI by deploying task-specific models that reduce inference costs and operational complexity.

Timeline

- H1 2026: Pilots with small language models

- End of 2026: Mainstream enterprise adoption

5. A “ChatGPT Moment” Arrives in Medicine

Prediction

Medical AI reaches a tipping point as self-supervised learning lowers development costs and expands diagnostic capabilities.

Technical rationale

Self-supervised methods eliminate the need for extensive expert labeling, enabling large-scale biomedical foundation models trained on diverse clinical data while preserving privacy.

Supporting data

Curtis Langlotz predicts that AI models trained on massive healthcare datasets will rival chatbot-scale systems, improving accuracy in radiology, pathology, oncology, and rare disease detection.

Business implication

Healthcare providers will prioritize systems that demonstrate measurable improvements in workflow efficiency, patient outcomes, and cost containment.

Timeline

- 2026: Rapid expansion of clinical pilots

- Late 2026: Early standardization in major health systems

6. Legal AI Shifts from Drafting to Reasoning

Prediction

Legal AI systems will be evaluated on rigorous, outcome-based metrics rather than surface fluency.

Technical rationale

New benchmarks focus on multi-document reasoning, citation integrity, and provenance tracking, supported by methods such as LLM-as-judge and pairwise preference ranking.
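One way to picture pairwise preference ranking is sketched below: every pair of candidate answers goes to a judge, and systems are ranked by the share of comparisons they win. The `citation_count_judge` function is a hypothetical stand-in for an LLM judge (it simply prefers the answer with more bracketed citations), and the candidate answers are invented.

```python
from itertools import combinations
from typing import Callable


def rank_by_pairwise_wins(
    candidates: dict[str, str],
    judge: Callable[[str, str], int],
) -> list[tuple[str, float]]:
    """Rank answers by the share of pairwise comparisons they win.

    judge(a, b) returns 0 if answer a is preferred, 1 if answer b is.
    """
    if len(candidates) < 2:
        raise ValueError("need at least two candidates to compare")
    wins = {name: 0 for name in candidates}
    games = {name: 0 for name in candidates}
    for left, right in combinations(candidates, 2):
        verdict = judge(candidates[left], candidates[right])
        winner = left if verdict == 0 else right
        wins[winner] += 1
        games[left] += 1
        games[right] += 1
    rates = [(name, wins[name] / games[name]) for name in candidates]
    return sorted(rates, key=lambda item: item[1], reverse=True)


def citation_count_judge(a: str, b: str) -> int:
    """Hypothetical stub judge: prefers the answer with more '[n]' citations."""
    return 0 if a.count("[") >= b.count("[") else 1


# Invented candidate answers, for illustration only.
candidates = {
    "system_a": "Held in Smith [1] and Jones [2].",
    "system_b": "Probably held somewhere.",
    "system_c": "Held in Smith [1].",
}
print(rank_by_pairwise_wins(candidates, citation_count_judge))
```

In practice the stub would be replaced by a call to a judging model. Judging each pair in both orders is a common precaution, since model judges can favor whichever answer appears first.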

Supporting data

Julian Nyarko predicts standardized evaluations tied to legal outcomes—accuracy, privilege exposure, and turnaround time—will become table stakes in 2026.

Business implication

Law firms unable to demonstrate defensible AI ROI risk losing clients to more transparent, metrics-driven competitors.

Timeline

- 2026: Broad adoption in in-house legal teams

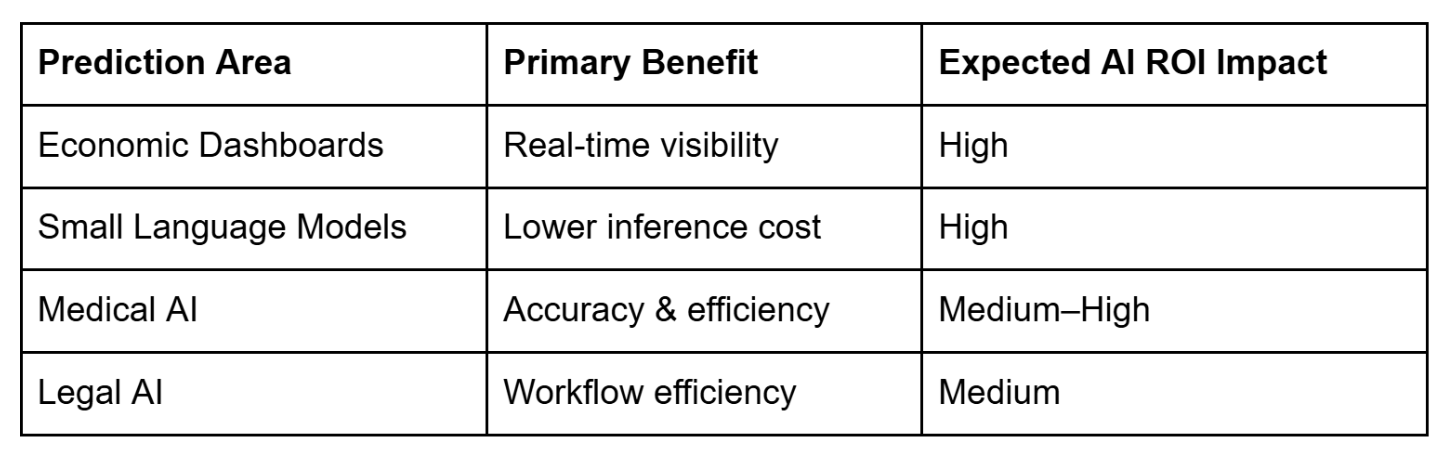

Comparative View: Predicted AI ROI Impact

Risks, Caveats, and Counterarguments

Despite the optimism, Stanford AI experts emphasize realism. Many AI projects will fail, environmental costs from compute-intensive models remain high, and poorly governed deployments can misdirect or deskill workers. Angèle Christin cautions that AI’s impact is often moderate rather than transformative: incremental gains mixed with new frictions.

Another risk lies in consumer-facing Generative AI tools bypassing institutional oversight, particularly in healthcare. Free, unregulated applications may outpace formal evaluation frameworks, raising safety and accountability concerns.

Practical Takeaways for Leaders

- Treat AI as operational infrastructure, not experimentation

- Demand task-level metrics tied to AI ROI

- Favor smaller, well-curated models over maximal scale

- Prepare for AI sovereignty and regulatory fragmentation

- Invest in human-centered design to preserve long-term skills

Conclusion

The core message of Stanford AI researchers’ 2026 outlook is clear: AI has entered a phase where measurement matters more than momentum. Evangelism is giving way to evaluation, and Generative AI will only remain viable if it delivers measurable outcomes in real operational environments.

This shift redefines AI not as isolated tools or projects, but as organizational infrastructure that must be measured, managed, and integrated. AI economic dashboards and performance metrics are no longer optional—they are becoming essential.

Makebot has embedded AI economic metrics across its MagicSuites platform, integrating AI chat consulting, AICC contact centers, AI search, chatbots, and work tools into a single system that consistently measures utilization, cost, and contribution.

The competitive edge of AI strategy now lies not in model selection, but in measurement, operation, and integration capability.

👉 www.makebot.ai | 📩 b2b@makebot.ai
