Open-Source vs Closed-Source LLMs: Why the Strategic Divide Matters More This Year

How cost, governance, and architecture are reshaping enterprise LLM strategy.

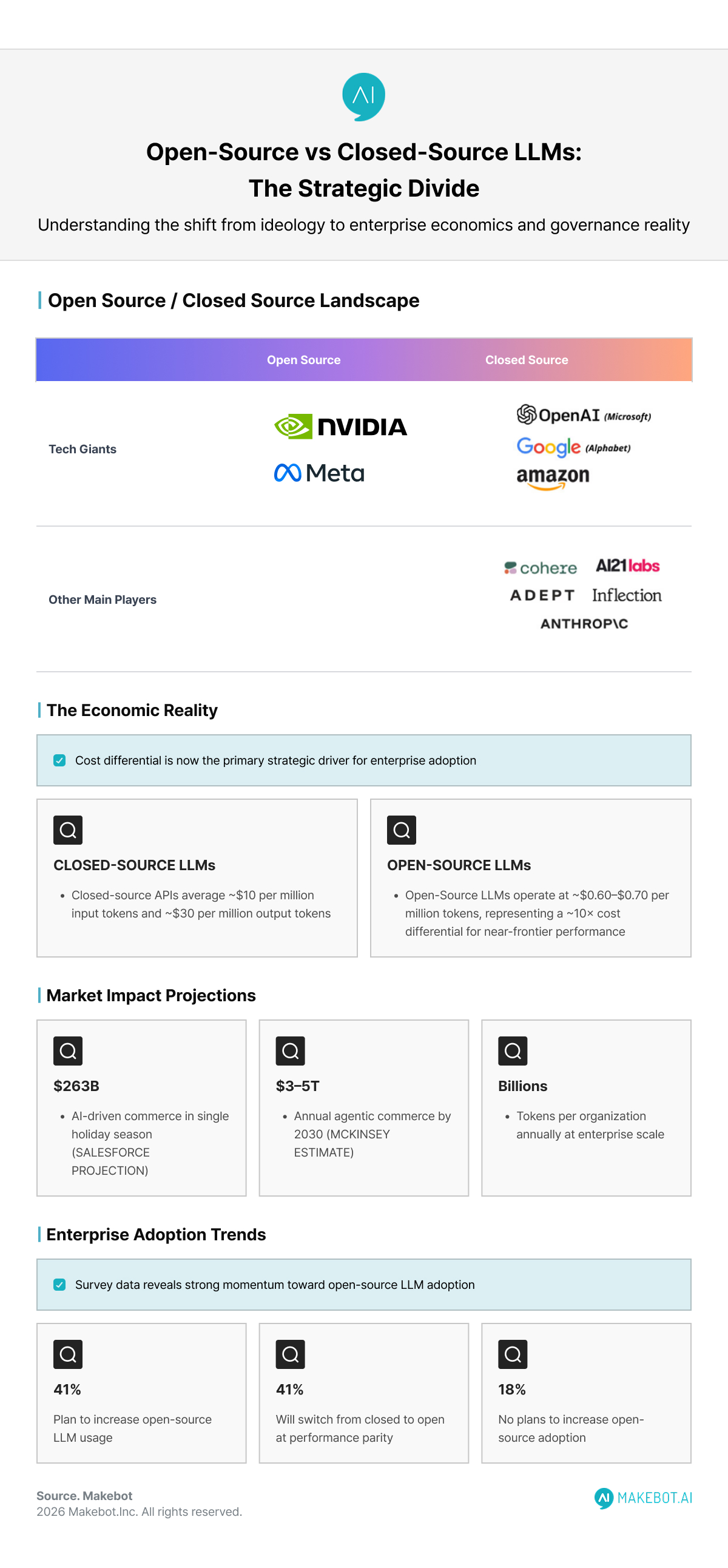

The tension between Open-Source LLMs and Closed-Source LLMs has existed since the first foundation models entered production. What makes this year different is that the debate has moved decisively from ideology into enterprise economics and governance reality.

According to multiple industry surveys, closed-source models dominated usage in 2023–2024, with roughly 80–90% market share. Entering this year, that dominance is eroding as generative AI workloads scale beyond pilots into persistent, high-volume systems.

This shift forces organizations to reassess not only model quality, but cost predictability, data sovereignty, regulatory exposure, and long-term lock-in—a reassessment that begins at the architectural layer.


Architecture & Performance: Frontier Leadership vs Practical Parity

Closed models still lead on aggregate benchmarks, particularly in generalized reasoning and multi-step tasks. For example:

- GPT-4 class models exceed 90% pass rates on HumanEval-style coding benchmarks

- Proprietary systems continue to top composite ELO-style evaluations across reasoning and instruction following

However, the rate of convergence is now measurable:

- Expert surveys estimate the open-to-closed performance lag has shrunk to ~12–16 months, down from 24+ months in early 2023

- Open models such as LLaMA-3-70B, Qwen-72B, and DeepSeek R1 now match or exceed closed models in specific domains, particularly coding, math, and retrieval-augmented tasks

Critically, this convergence coincides with specialization, not commoditization. Instead of chasing a single “best” score on a general LLM benchmark, the ecosystem is fragmenting into domain-optimized systems, which shifts the strategic question from “who is best?” to “what is sufficient, and at what cost?”

That reframing leads directly into economics.

Economics: Cost Curves Are Now the Primary Driver

The most decisive data point this year is cost.

- Closed-source APIs average ~$10 per million input tokens and ~$30 per million output tokens

- Comparable Open-Source LLMs operate at ~$0.60–$0.70 per million tokens, a cost differential of at least ~10× for near-frontier performance
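To make the gap concrete, here is a minimal sketch that applies the per-million-token prices quoted above to a workload. The token volumes are hypothetical, chosen only for illustration, and the open-model figure is the quoted per-token rate alone: it leaves out any infrastructure, orchestration, or staffing costs.

```python
# Prices in USD per million tokens, taken from the figures quoted above.
CLOSED_INPUT_PER_M = 10.00
CLOSED_OUTPUT_PER_M = 30.00
OPEN_PER_M_HIGH = 0.70  # upper end of the quoted ~$0.60-$0.70 range


def workload_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in USD for a token volume at per-million-token prices."""
    return ((input_tokens / 1_000_000) * input_price_per_m
            + (output_tokens / 1_000_000) * output_price_per_m)


# Hypothetical monthly workload (an assumption, not a figure from the article).
monthly_input_tokens = 2_000_000_000
monthly_output_tokens = 500_000_000

closed_cost = workload_cost(monthly_input_tokens, monthly_output_tokens,
                            CLOSED_INPUT_PER_M, CLOSED_OUTPUT_PER_M)
open_cost = workload_cost(monthly_input_tokens, monthly_output_tokens,
                          OPEN_PER_M_HIGH, OPEN_PER_M_HIGH)

print(f"closed API: ${closed_cost:,.0f}")
print(f"open model: ${open_cost:,.0f}")
print(f"ratio:      {closed_cost / open_cost:.1f}x")
```

Under these assumed volumes the closed-API bill comes to $35,000 against $1,750 for the open model, a 20× gap. The exact multiple depends on the mix of input and output tokens, because the closed-API prices differ between the two.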

At an experimental scale, this difference is marginal. At enterprise scale, it becomes existential:

- Salesforce projects $263 billion in AI-driven commerce in a single holiday season

- McKinsey estimates $3–5 trillion annually in agentic commerce by 2030

- These projections imply billions of tokens per organization per year, making API-only strategies increasingly fragile

Enterprise sentiment reflects this math:

- 41% of enterprises plan to increase open-source LLM usage

- 41% will switch from closed to open once performance parity is reached

- Only 18% report no plans to increase open-source adoption

- Expected steady-state usage: ~50/50 open vs closed, down from ~90% closed in 2023

Yet lower token costs do not automatically mean lower total cost: self-hosting shifts spending toward infrastructure, orchestration, and governance, which reframes the discussion around control.

Governance, Sovereignty, and Regulation: Control Is Becoming Strategic

Regulatory divergence is now a material architectural constraint.

- The EU AI Act explicitly recognizes open-source foundation models as an economic public good, while still enforcing risk-tier obligations

- U.S. policy has shifted toward light-touch, innovation-first regulation, increasing pressure on enterprises to self-govern

- China has emerged as the largest producer of high-impact AI research, with a strong emphasis on open-weight releases

Sovereignty is no longer abstract:

- Countries are investing billions in national data centers to run models locally

- Enterprises increasingly require on-prem or private-cloud LLM deployment to satisfy data residency rules

- Open-weight models enable this directly; closed APIs often do not

This is why governance leaders increasingly value auditability over abstraction—a shift that elevates system architecture above model branding and forces organizations to think about execution.

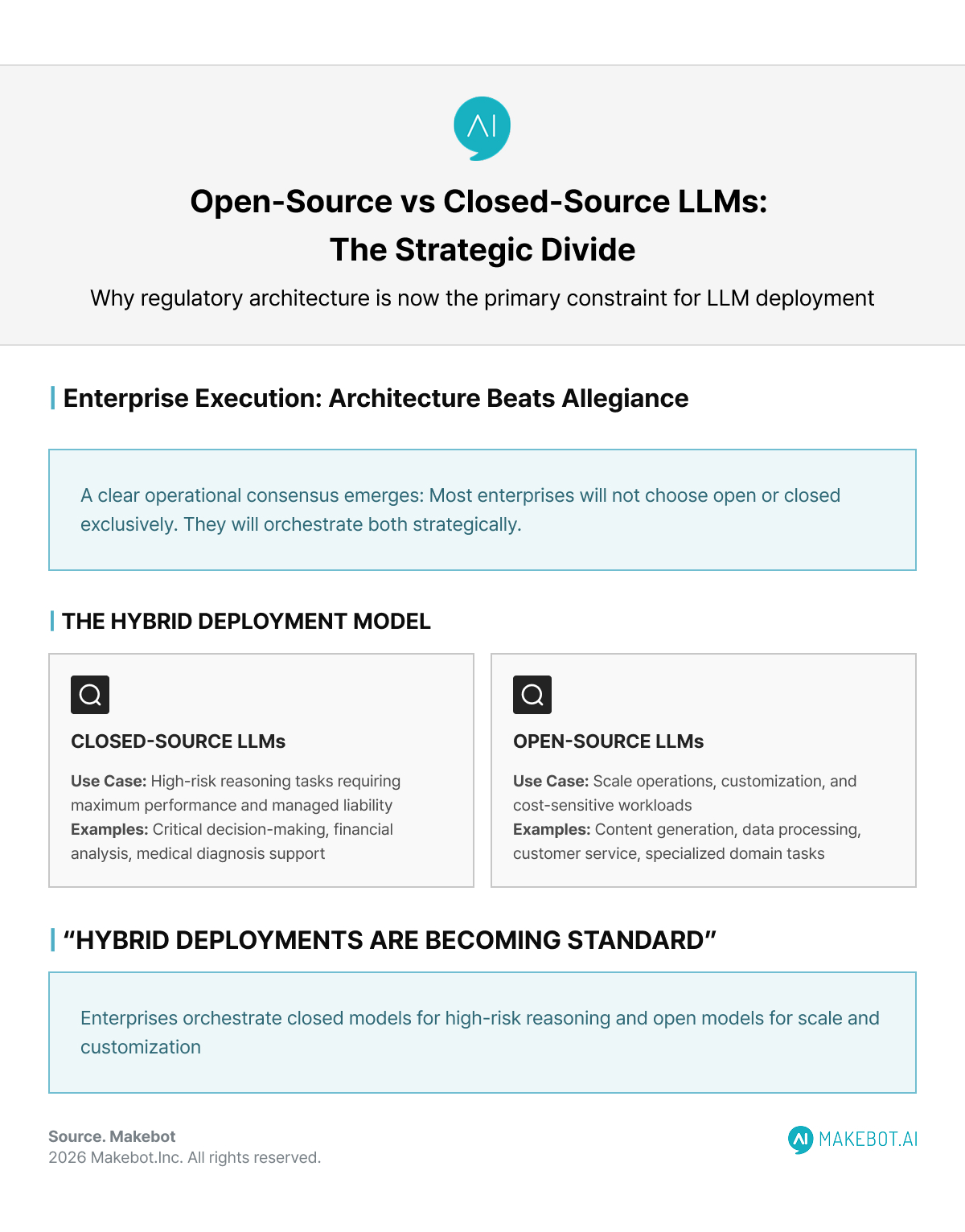

Enterprise Execution: Architecture Beats Allegiance

A clear operational consensus emerges: Most enterprises will not choose open or closed. They will orchestrate both.

Evidence supporting this:

- Stanford HAI researchers report limited productivity gains outside targeted domains, pushing firms to optimize architectures rather than chase larger models

- MIT Technology Review highlights reasoning systems paired with verification layers, not monolithic models, as the next phase

- Hybrid deployments—closed models for high-risk reasoning, open models for scale and customization—are becoming standard
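The hybrid pattern described in the last bullet can be sketched as a small routing function. Everything here is illustrative: the `Request` fields, the backend labels, and the rule order are assumptions made for the example, not a policy taken from any of the sources cited above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    prompt: str
    high_risk: bool                # hypothetical flag: needs frontier-grade reasoning
    contains_regulated_data: bool  # hypothetical flag: subject to data residency rules


def choose_backend(req: Request) -> str:
    """Pick a backend label for a request (illustrative policy only)."""
    # Regulated data stays on infrastructure the enterprise controls.
    if req.contains_regulated_data:
        return "open_self_hosted"
    # High-risk reasoning goes to the closed model.
    if req.high_risk:
        return "closed_api"
    # Everything else runs on the cheaper open model for scale.
    return "open_self_hosted"


print(choose_backend(Request("Summarize this contract clause", True, False)))
print(choose_backend(Request("Classify this support ticket", False, False)))
```

A real deployment would likely derive these flags from classifiers or policy metadata rather than set them by hand. The point of the sketch is that the open-versus-closed decision is made per request, in code, rather than once for the whole organization.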

This is where platforms like Makebot enter the discussion—not as market definers, but as examples of how these trade-offs can be operationalized. Makebot’s HybridRAG framework, presented at SIGIR 2025, reflects the industry’s shift away from brute-force inference toward architectural efficiency. By moving heavy LLM usage into offline QA pre-generation and reserving live inference for fallback scenarios, HybridRAG reduces both cost volatility and hallucination risk across open and closed backbones.
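The general idea of answering from pre-generated QA pairs and falling back to live inference can be sketched as follows. This is not Makebot's implementation, whose details are not given here. The word-overlap matching, the `threshold` value, and the `live_llm` callable are all assumptions chosen to keep the example self-contained.

```python
import re
from typing import Callable


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric word set, used for a crude similarity measure."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard overlap between two word sets (0.0 if either is empty)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def answer(query: str, qa_bank: dict[str, str],
           live_llm: Callable[[str], str],
           threshold: float = 0.6) -> tuple[str, str]:
    """Return (answer, source): a stored answer if a close match exists, else a live call."""
    query_tokens = tokens(query)
    best_question, best_score = None, 0.0
    for question in qa_bank:
        score = jaccard(query_tokens, tokens(question))
        if score > best_score:
            best_question, best_score = question, score
    if best_question is not None and best_score >= threshold:
        return qa_bank[best_question], "pregenerated"
    # No sufficiently close stored question: pay for live inference.
    return live_llm(query), "live_fallback"


# QA pairs assumed to have been generated offline, ahead of time.
qa_bank = {"How do I reset my password?": "Use the reset link on the sign-in page."}


def fake_live_llm(q: str) -> str:
    """Stand-in for a real model call."""
    return f"[live answer to: {q}]"


print(answer("how do i reset my password", qa_bank, fake_live_llm))
print(answer("What is your refund policy?", qa_bank, fake_live_llm))
```

A production system would presumably use embedding-based retrieval rather than word overlap, but the control flow is the same: the expensive model call happens only when the stored answers do not cover the query.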


Conclusion: Coexistence Is Now the Default Strategy

This year makes one conclusion unavoidable: Open-Source LLMs and Closed-Source LLMs represent different strategic levers, not competing endgames.

- Open models offer cost efficiency, sovereignty, and transparency

- Closed models offer polish, centralized safety, and predictable support

- Enterprises increasingly require both, coordinated through architecture rather than ideology

As Generative AI adoption accelerates, competitive advantage will flow to organizations that treat Large Language Models as interchangeable components within rigorously engineered systems. The winners will not be those who pick sides—but those who design for flexibility, economics, and governance from the start.

As enterprises navigate the real trade-offs between Open-Source LLMs and Closed-Source LLMs, execution—not ideology—becomes the differentiator. Makebot is one of the few platforms already aligned with this direction, offering a practical example of how organizations can operationalize hybrid LLM strategies with cost control, governance awareness, and production-grade reliability.

👉 www.makebot.ai | 📩 b2b@makebot.ai
