Redefining Talent in the AI Era: From Tool Proficiency to Enterprise Advantage

AI advantage now comes from talent systems—blending domain depth, human-AI design, and execution.

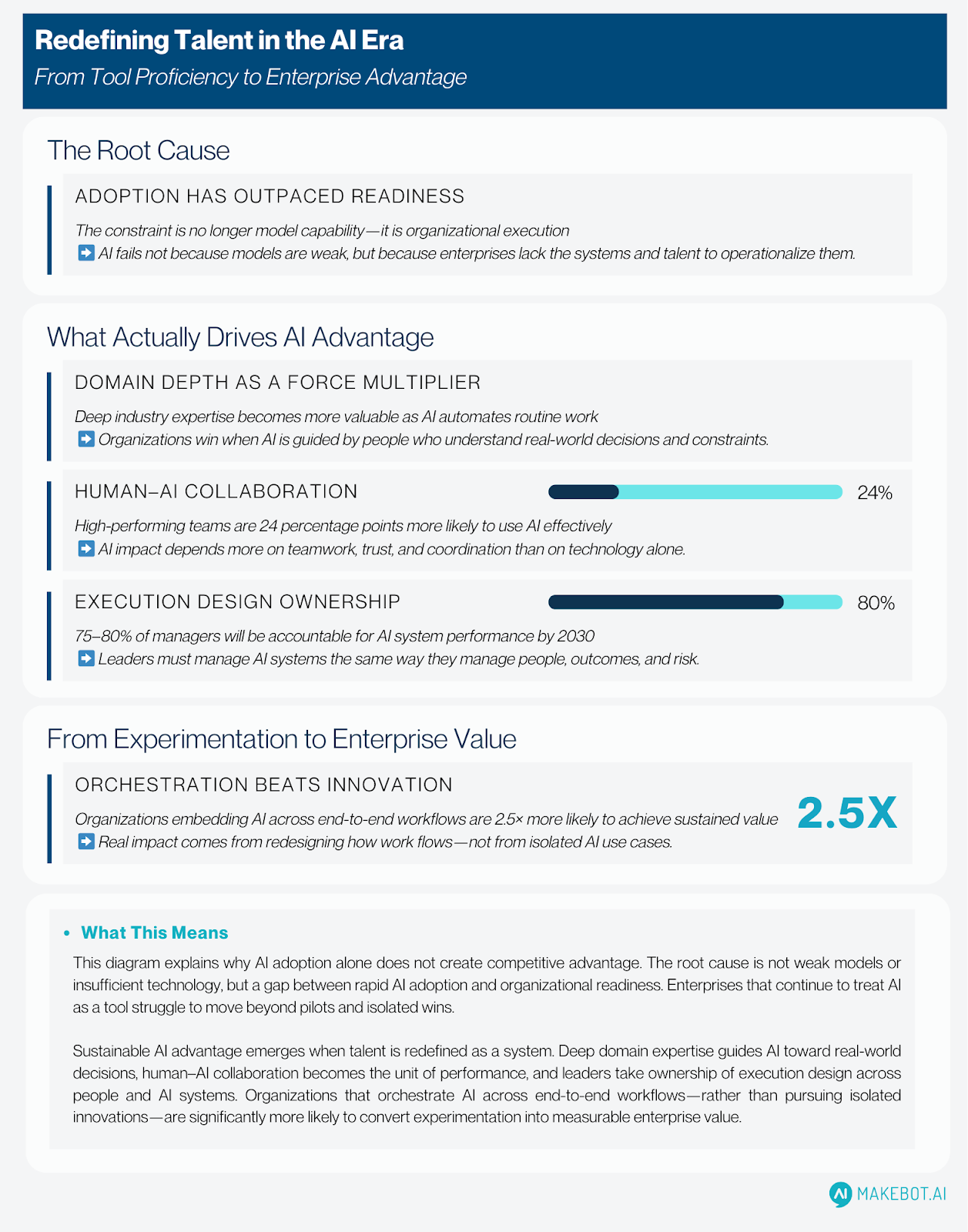

Enterprise leaders are facing a paradox. Artificial intelligence, particularly generative AI and large language models, has never been more accessible, yet measurable business impact remains elusive. According to McKinsey, 78% of organizations globally now use AI in at least one business function, but fewer than 39% report measurable EBIT impact from those deployments.

This disconnect is not marginal; it is structural. Deloitte estimates that despite $30–40 billion invested annually in generative AI, 95% of enterprises still report zero ROI at scale. Adoption, in other words, has outpaced organizational readiness.

The constraint is no longer model capability or compute availability. It is the enterprise's ability to translate AI potential into operational outcomes. In this context, redefining talent for the AI era becomes a strategic necessity, not an HR initiative.

The end of AI as a differentiator

AI tools are rapidly commoditizing. Independent benchmarks show that performance differences between leading foundation models have narrowed to single-digit margins for common enterprise tasks such as summarization, retrieval, and classification. At the same time, open-source ecosystems have reduced AI deployment costs by as much as 70% compared to proprietary stacks, according to the Linux Foundation.

Despite this accessibility, enterprise results have not improved at the same pace. Accenture finds that organizations spend three times more on AI technology than on workforce and operating-model transformation, even though companies that invest proportionally in people are 2.5× more likely to achieve enterprise-wide AI impact.

As a result, technical proficiency alone is no longer a competitive advantage. Skills such as prompt engineering, API integration, and model selection can be learned and replicated quickly. What remains defensible is the ability to embed AI into decision-critical workflows, where mistakes carry financial, regulatory, or safety consequences.

This imbalance explains a common pattern across industries:

- Proofs of concept succeed

- Pilot KPIs look promising

- Enterprise deployments stall or fragment

The breakdown is not technological—it is organizational.

Talent as a system, not a skillset

In the AI era, talent must be treated as a system-level capability—not a list of isolated skills. The organizations seeing real impact are not adding AI skills to existing roles; they are redesigning how work gets done.

McKinsey’s research on agentic operating models shows that companies that restructure work around human-AI systems achieve 30–40% higher productivity gains than those that simply layer AI onto legacy roles.

This shift reframes talent along three dimensions:

- Domain depth as a multiplier. As AI takes over repeatable tasks, deep industry expertise becomes more valuable, not less. Recent studies show that specialists with strong domain knowledge are being redeployed into higher-impact decision roles, while generic roles deliver diminishing marginal value in AI-enabled environments.

- Human-AI collaboration as the unit of performance. Performance is no longer driven by individuals or tools alone. Deloitte's 2026 survey of 1,394 professionals found that high-performing teams were 24 percentage points more likely to use AI, yet the strongest predictor of their success was not technology but trust, informed agility, and connected teaming.

- Execution design as a core capability. Leaders are now responsible for managing both people and AI agents. Gartner projects that 75–80% of managers will be accountable for AI system performance by 2030. That accountability requires fluency in system behavior, failure modes, and escalation paths, not just model outputs.

Why industry context matters more than model choice

The value of AI is most visible—and most fragile—in operationally complex environments, where decisions carry real financial, regulatory, or safety consequences. In these settings, model performance alone is insufficient. What matters most is whether AI systems are designed to operate within the realities of a specific industry context.

Palantir illustrates this principle clearly through its Forward Deployed Engineer (FDE) model. Rather than delivering AI models or platforms in isolation, Palantir organizes its teams around engineers who are embedded directly within customer environments.

FDEs are engineers who also function as on-the-ground operational experts. They develop a deep understanding of the customer’s industry structure, regulatory constraints, and real decision-making workflows, and then directly design, adapt, and iterate AI systems so they function reliably in production environments. This proximity allows AI systems to be shaped by real operational feedback—latency constraints, governance requirements, exception handling, and human decision loops—rather than abstract benchmarks or model scores.

This approach reflects Palantir’s core philosophy: the key differentiator is not which model is used, but how AI is designed to make decisions within a specific operational context. Models can be swapped, upgraded, or commoditized. Industry understanding, execution discipline, and workflow integration cannot.

From a talent perspective, the FDE model makes the implications explicit. High-impact AI organizations invest in people who can:

- Understand how decisions are actually made in real-world operations

- Translate regulatory, safety, and operational constraints into system design

- Continuously adapt AI workflows based on production feedback

Organizations that lack this kind of context-rich talent often fall into what Deloitte describes as “automation without advantage”—higher throughput and faster processes, but no meaningful improvement in customer outcomes, resilience, or risk management.

Palantir’s FDE model demonstrates why redefining talent in the AI era is not about hiring better model builders, but about cultivating human–AI system designers who can bridge technology, industry reality, and execution.

Rethinking hiring, upskilling, and organizational design—together

Despite clear signals from the market, most enterprises are still hiring based on pre-AI job architectures, even as the nature of work is changing.

Accenture’s workforce research highlights a growing disconnect:

- 84% of executives plan to redesign roles around AI agents

- Yet only 20% are actively rethinking how work itself is structured

This gap matters because 75% of roles are expected to require redesign, upskilling, or redeployment by 2030, rather than elimination.

Critically, research shows that training alone does not change how people work. In one longitudinal study, nearly 70% of employees skipped formal AI training and instead learned through trial and error or from colleagues. This suggests that AI skills stick only when learning is embedded directly into daily workflows.

Enterprises that succeed align three elements at the same time:

- Hiring criteria that combine deep domain expertise with AI fluency

- Incentives that reward experimentation, adoption, and outcome improvement

- Operating models designed for human–AI collaboration from the start

This coordinated approach—not isolated initiatives—is the foundation of AI-driven talent management.

From experimentation to enterprise value

Despite widespread experimentation, real business impact remains rare. Accenture reports that only 13% of organizations have generated meaningful enterprise-level value from generative AI, even though 36% say they have “scaled” AI solutions. In most cases, scale refers to broader deployment—not measurable impact.

Deloitte’s analysis points to orchestration, not innovation, as the main bottleneck. Organizations that embed AI across end-to-end business domains are 2.5× more likely to achieve sustained value than those running isolated use cases.

Achieving this shift requires talent that can:

- Design AI across entire value chains, not individual tasks

- Govern AI systems with clear operational and ethical accountability

- Continuously adjust how work is shared between humans and machines as capabilities evolve

These capabilities do not sit within traditional roles. They form a new operating layer inside the enterprise—one that determines whether AI delivers lasting value or remains a series of disconnected experiments.

What this means for Makebot

Makebot’s trajectory reflects this broader shift. From the outset, the company has focused on domains where AI must operate under real-world constraints—healthcare, commerce, transportation, and other complex environments.

Rather than treating AI as a standalone capability, Makebot has invested in:

- Industry-grounded problem definition

- Execution-aware AI development

- Architectures optimized for cost, latency, and accuracy in production

This approach is exemplified by Makebot’s HybridRAG framework, presented at SIGIR 2025, which demonstrated 26%+ accuracy improvements, lower latency, and dramatically reduced inference costs in enterprise document intelligence use cases.

Ultimately, redefining talent for the AI era is not about accumulating AI skills. It is about cultivating talent that integrates technology, industry insight, and execution discipline.

How a company defines AI-era talent ultimately defines its AI strategy—and determines whether AI becomes a durable advantage or another unrealized investment.

👉 www.makebot.ai | 📩 b2b@makebot.ai